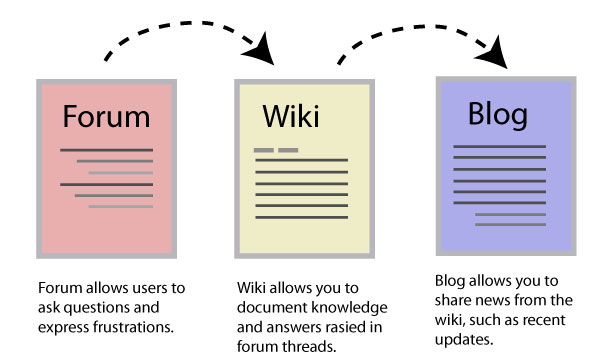

Forum → Wiki → Blog Workflow

One of the sites I'm working with lately at my job combines a forum (vBulletin), a blog (Joomla), and a wiki (MediaWiki) into one experience. Each of these tools does a great job at what it was designed to do. They're three separate platforms, skinned and linked together.

I used to think the site was a hodgepodge of software platforms, but now I see that these three resources can harmonize in an amazing way.

Here's the interaction in a little more detail:

- Forum: Users contribute openly and regularly to the forum, creating several new threads and probably 15 new responses a day. Users feel comfortable posting and responding to forum questions because they aren't making official remarks about any topic. They're offering their thoughts or asking questions. It's an informal medium that is inviting and comfortable.

- Wiki: The conversations on the forum drive needs in the wiki. Answers and resolutions from the most popular forum threads should be transferred to the wiki as official articles. Transferring this content requires you to organize and articulate the information, which isn't always easy. So admittedly this transfer isn't often done on the site I mentioned, but if someone were assigned this role, it could be powerful.

- Blog: The blog showcases the information recently added to the wiki. The blog can also serve as a voice to energize a community, to call attention to needs on the wiki, or to bring other news to users.
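The forum-to-wiki-to-blog handoff described above can be sketched in a few lines of Python. This is only an illustration, not how the site actually works: the `Thread` class, the `resolved` flag, and the `min_replies` threshold are all hypothetical, and nothing here talks to vBulletin, MediaWiki, or Joomla.

```python
from dataclasses import dataclass


@dataclass
class Thread:
    """A forum thread, reduced to the fields this sketch needs (hypothetical)."""
    title: str
    replies: int
    resolved: bool


def wiki_candidates(threads, min_replies=10):
    """Return resolved threads popular enough to become wiki articles.

    The min_replies cutoff is an assumed stand-in for "most popular".
    """
    popular = [t for t in threads if t.resolved and t.replies >= min_replies]
    return sorted(popular, key=lambda t: t.replies, reverse=True)


def blog_announcement(titles):
    """Build a short blog post announcing newly added wiki articles."""
    lines = ["New on the wiki:"]
    lines += [f"- {title}" for title in titles]
    return "\n".join(lines)


# Made-up sample data for illustration only.
threads = [
    Thread("How do I reset my password?", replies=24, resolved=True),
    Thread("Favorite keyboard shortcuts", replies=40, resolved=False),
    Thread("Export fails on large files", replies=12, resolved=True),
    Thread("Typo on the login page", replies=2, resolved=True),
]

candidates = wiki_candidates(threads)
print(blog_announcement([t.title for t in candidates]))
```

The hard part, organizing and articulating each thread into an article, still falls to a person. The sketch only covers deciding which threads to harvest and what to announce.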

I never considered how well these tools work together, but they do. The different mediums allow users to interact in ways that suit them. Of course it would be nice to have one tool that has an incredibly powerful blog, wiki, and forum wrapped up into one package. Some wiki platforms provide all three, such as Tiki Wiki. But Swiss Army knife tools almost invariably perform much like an on/off-road motorcycle: passable in both settings, excellent in neither.

The drawback of having three sources for content, however, is that content published on one source may never make it to the other sources. For example, if I write a blog article about a new application, shouldn't that content also appear as an article on the wiki? If a forum thread clarifies a topic, shouldn't that clarification be added to a wiki article? If I add a new wiki section, shouldn't that section be announced and summarized, as well as explained, on the blog? Content overlap becomes a problem. So does search.
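One rough way to picture the overlap problem is as a set comparison: list the topics each source covers and see what each one is missing. The sketch below assumes someone has already tagged content by topic. The `missing_from` helper and the sample topics are hypothetical.

```python
def missing_from(topics_by_source):
    """Map each source to the topics covered elsewhere but not there."""
    all_topics = set().union(*topics_by_source.values())
    return {
        source: sorted(all_topics - topics)
        for source, topics in topics_by_source.items()
    }


# Made-up topic coverage for illustration only.
coverage = {
    "forum": {"password reset", "export errors"},
    "wiki": {"password reset"},
    "blog": {"new application"},
}

gaps = missing_from(coverage)
for source, topics in gaps.items():
    print(f"{source} is missing: {', '.join(topics)}")
```

Not every gap needs filling. A forum thread doesn't need to mirror every blog post, for instance, but the wiki's gap list is a reasonable to-do list for whoever maintains it.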

Regardless of the overlap problem, combining a forum with a wiki and blog has tangible benefits. It helps solve the participation problem with wikis. Users are more comfortable asking a question in a forum than changing the original content of an article. Wiki admins can harvest information from these forum threads to strengthen the wiki. Significant new wiki information should be announced to users on the blog.

About Tom Johnson

I'm an API technical writer based in the Seattle area. On this blog, I write about topics related to technical writing and communication, such as software documentation, API documentation, AI, information architecture, content strategy, writing processes, plain language, tech comm careers, and more. The opinions I express on my blog are my own points of view, not those of my employer.