Technical Communication Metrics: What Should You Track?

In 2004, when I returned from a teaching stint in Egypt and began working as a copywriter for a health company in Clearwater, Florida, my manager insisted that I track something related to my writing. We decided that I would track word count, because this was the easiest thing to track.

Each week, I graphed the number of words I published, and during a weekly meeting, I held up my graph. If the number decreased for the week, I formatted the arrow red. If it increased, I formatted the arrow black.

My graphs regularly alternated between black and red arrows, and I found the whole exercise somewhat amusing and ridiculous. But I went along with it, because everyone was tracking something. We all had to create these little charts that we held up in weekly status report meetings.

Despite my cavalier attitude toward tracking word count, I can tell you that I wrote far more words than my manager could process. After working there several months, I had built up such a mountain of content -- press releases, radio pitches, product descriptions, newsletter articles, pamphlets, e-mail campaigns -- that my output was undeniable in size. I do think that holding up the silly little graphs each week had some impact on my determination to write.

Lately I have been trying to figure out the right metrics for my role as a technical writer. A lot has been written about metrics and technical communication. Many technical writers have struggled to define meaningful metrics, whether because their managers require them or on their own initiative. One of the most common goals with metrics is to connect writing activities to financial figures, since this allows technical writers to establish value in a quantitative way that speaks to senior leaders.

A few possible metrics

Despite the need for these metrics, a sound way to measure the value of technical writing remains as elusive as ever. The following are several possible ways to measure it:

Support costs. No group has more metrics associated with it than help desks. They meticulously track the number of calls coming in, the product the call is about, and the estimated cost of each call. If a software application receives 3,000 calls a month, and each support call costs $25, the software costs the company about $75,000 a month in support costs.
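The support-cost estimate is a single multiplication. Here's a minimal Python sketch using the figures from the example above. The $25 per call is the example's estimate, not a benchmark, and the function name is hypothetical:

```python
def monthly_support_cost(calls_per_month: int, cost_per_call: float) -> float:
    """Estimated monthly support cost for one application."""
    return calls_per_month * cost_per_call


# Figures from the example: 3,000 calls a month at about $25 per call.
cost = monthly_support_cost(3_000, 25.0)
print(f"${cost:,.0f} per month")  # $75,000 per month
```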

How documentation affects support costs can be tricky to estimate because a lot of factors come into play:

- Support calls spike when a product is initially released.

- Support calls drop over time as users become more familiar with the application.

- Not all support calls are resolvable through help material.

- It's hard to determine the influence of help material without a similar application that has no help material.

Helpfulness ratings. Another technique might be to embed a "Was this topic helpful?" question in your documentation. Then count the number of people who indicated that the topics helped them. You could measure your own success based on the number of yes responses versus no responses. If you equated each "yes" response with the cost of a support call, you could make a case that documentation is saving the company that amount in support costs.

For example, if 100 people indicated that topics were helpful, and half of those people would otherwise have called the support center ($25 a call), documentation saved about $1,250 (50 avoided calls at $25 each).
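Here's a minimal Python sketch of the helpfulness calculation. The deflection rate (the share of "yes" voters who would otherwise have called support) is an assumption you would have to estimate, because the survey itself can't tell you. The function names and the 25 "no" votes in the ratio example are hypothetical.

```python
def helpfulness_ratio(yes_votes: int, no_votes: int) -> float:
    """Share of "Was this topic helpful?" responses that were "yes"."""
    total = yes_votes + no_votes
    return yes_votes / total if total else 0.0


def deflection_value(yes_votes: int, deflection_rate: float,
                     cost_per_call: float) -> float:
    """Estimated support cost avoided.

    deflection_rate is an assumption: the fraction of "yes" voters who
    would otherwise have called the support center.
    """
    return yes_votes * deflection_rate * cost_per_call


print(deflection_value(100, 0.5, 25.0))  # 1250.0
print(helpfulness_ratio(100, 25))        # 0.8 (hypothetical 25 "no" votes)
```

Keep in mind the caveat in the next paragraph: if unhappy readers vote more often than satisfied ones, the ratio will understate helpfulness.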

Unfortunately, people tend to be more willing to give feedback when it's negative than when it's positive. We love to complain more than praise, so I'm not sure how accurate this method would be.

Page hits. Page hits to help material aren't a direct indicator of success, but what if you're publishing web articles that double as marketing collateral? If you take the tips and how-to's from the help, you can publish these on your corporate blog. Hits to these articles can be quantified and converted into a financial figure.

For example, let's say you publish an article that receives 10,000 hits. Google might charge 25 cents a hit in a pay-per-click campaign to generate an equal number of hits, so the financial worth of that article you published is at least $2,500, plus the effort to write the article. If you publish 50 articles like this a year, you're contributing a value of about $125,000.
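The same arithmetic as a minimal Python sketch. The 25-cents-per-click figure is the example's rough assumption, not a quoted ad rate. The function names are hypothetical, and the sketch leaves out the cost of the writing effort:

```python
def article_value(hits: int, cost_per_click: float) -> float:
    """Cost of buying the same traffic through a pay-per-click campaign."""
    return hits * cost_per_click


def annual_value(articles_per_year: int, hits_per_article: int,
                 cost_per_click: float) -> float:
    """Yearly value, assuming every article draws the same traffic."""
    return articles_per_year * article_value(hits_per_article, cost_per_click)


print(article_value(10_000, 0.25))     # 2500.0
print(annual_value(50, 10_000, 0.25))  # 125000.0
```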

Word count. You can also estimate the value of your contribution by measuring your word output and then multiplying this output by a cost figure. For example, let's say that in one week, you write 5,000 words. In the freelance world, a writer might charge about $75 to write 200 words. At that rate, you can estimate the value of your contributions at about $1,875 for the week (25 blocks of 200 words at $75 each). Of course, this method assumes that research, SME interviews, explorations of the system, and other non-writing activities are all folded into that word output.
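A minimal Python sketch of the freelance-equivalent calculation, assuming the $75-per-200-words rate from the example (the function name is hypothetical):

```python
def freelance_equivalent(words: int, rate: float,
                         words_per_rate: int = 200) -> float:
    """Price a word count at a freelance rate of `rate` per `words_per_rate` words."""
    return words / words_per_rate * rate


print(freelance_equivalent(5_000, 75.0))  # 1875.0
```

Like the estimate itself, this ignores non-writing work unless you fold that work into the word count.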

Most of these measures connect with a financial value, but if establishing financial value isn't important, you could still measure a great many things related to your role.

What you track, you focus on

One of the problems with metrics is that we technical writers are so detail-oriented that we often get discouraged by the inability to track our contribution to the bottom line with a fair degree of accuracy. Consequently, we often don't track anything at all -- while still remaining passionate that our contributions make a significant impact.

If we dismiss metrics because they are slippery and inaccurate, we are selling ourselves short. Here's the secret about metrics: what you track, you soon care deeply about. At work I started tracking the number of words I publish every week. As a result, I've noticed that I have become more focused on my output. During the day, if I haven't published anything, I start to feel lazy and unproductive. I have to get something out there, something written and published. I also don't let half-written things languish. I see them through to publication.

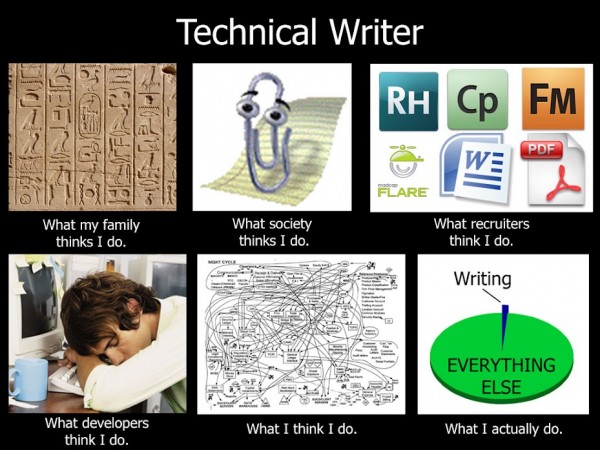

But here's the problem with tracking word count: most of the non-writing activities I do lose their value because I am no longer tracking them. This matters because technical writers don't actually spend much of their time writing. I don't know if this is a travesty of the meeting-filled corporate life, or just the nature of the job. But take a look at this graphic that Mike Landry sent me last week:

As you can see, technical writers spend very little time writing, maybe 10 percent of their day at most. Often, the more senior you are, the less you write. This graphic accurately describes my life as a technical writer. It's easy to let my day fill up with non-writing activities, such as the following:

- Attending scrum meetings

- Meeting with developers to talk about how an application works

- Discussing content that interns are writing

- Figuring out if I should jailbreak my iPhone to record mobile app screencasts

- Discussing IP omissions with released applications

- Talking about social media strategies we don't have (e.g., should we engage shallowly on multiple channels, or deeply on one?)

- Working on team mission statement

- Printing out latest Intercom magazine issue on information architecture

- Figuring out how to add captions to linked images in MediaWiki

- Reviewing the latest changes to the wiki

- Gathering accomplishment highlights for previous month

- Reviewing forum posts from volunteer testers for applications in beta

- Editing existing help to include gotcha notes and known limitations clauses

- Strategizing about upcoming projects and wondering how to get funding

- Responding to various e-mail messages to clear out inbox

And before you know it, it's 5:30 pm, the day is over, and you haven't written or produced anything substantial. However, if you have a metric you're tracking, you suddenly become accountable. All those non-writing activities lose their value. If your goal is to publish 1,000 words a day, everything else you do is no longer the first priority. That's what's interesting about metrics: what you choose to track changes how you prioritize the activity. Therefore, you must think carefully about what you want to track.

The question, then, is not only what you can track, but what you should track. I am convinced that everyone should track something. Tracking can help you dramatically improve your performance in whatever you track. This year, while searching for a meaningful metric, I am tracking anything I can easily measure. Since I play both technical writing and marketing roles at work, I have more things available to track: words published, overall site traffic, individual article traffic, number of articles published, publication dates, volunteer word count, number of volunteers who joined projects, RSS followers, Twitter followers, Facebook likes, Google+ joins, and number of social media updates.

Of all these metrics, which is the most important to focus on? What is it that turns the wheel to make all of these numbers move forward? What is the driving force that accelerates social media growth, new visitors, and subscribers? Published content plays a huge role in driving these numbers -- good-quality content that aligns with our readers' and users' interests. The more I publish, the more each of these numbers goes up. So are words published the most important thing to track?

The consensus is against word count

Despite the grueling focus that tracking word count provides, the literature on the subject is decidedly against word count as a metric in tech comm. In fact, I have not read a single article or blog post that recommends tracking word count at all. Here's a bit of research on the subject that I've culled from Intercom, Technical Communication Journal, LinkedIn, and elsewhere:

Research indicates that no industry standards are available for technical writer productivity rates. Some practices, such as page counts, have proven to be counter-productive in our experience. If a writer is evaluated by number of pages, page counts may tend to increase to the detriment of quality. In many projects, reducing page count should be the goal. Page counts also do not take into account the varying complexity levels of different deliverables; realistically, it takes longer to produce a page of highly technical material compared to user help. ("Measuring Productivity," by Pam Swanwick and Juliet Leckenby. Intercom. September/October 2010. p. 9. URL: http://intercom.stc.org/2010/09/measuring-productivity/)

One group sees productivity metrics as dangerous. They once tracked pages per year per writer, but found the algorithm of little value. They believe that focusing their efforts on customer satisfaction is more important than counting departmental pages-per-week throughput. ("Documentation and Training Productivity Benchmarks," by John P. "Jack" Barr and Stephanie Rosenbaum. Technical Communication Journal. Volume 50, No. 4, Nov 2003. p. 470)

The obvious, easily measured metrics are generally not very useful. For instance, there's a temptation to measure technical writers by the gross output or superficial productivity—pages per day or topics per hour, respectively. These metrics are seductive because they are easy to calculate. But it's an axiom of management that people will focus on whatever is measured. If you judge people by page count, they will produce lots and lots of pages. (Many of us succumbed to the “make the font bigger” approach in high school to fill out required pages for writing assignments.) If you measure writers by the number of topics they produce, you can expect to see lots and lots of tiny topics. Furthermore, this raw measurement of productivity doesn't measure document quality. ("Managing Technical Communicators in an XML Environment," by Sarah O'Keefe. Scriptorium.)

At Sabre Computer Reservation Company, for example, Blackwell (1995) shows how task analysis by a team of professional writers resulted in reduction of the number of pages in one manual from 100 to 20--and the consequent savings in production costs of nearly $19,000, more than paying for the effort that went into the task analysis. Note too the importance of measuring writing productivity in units other than pages per unit of time--had such a measure been used here, writer productivity would appear to have plunged. In fact, the rest of the article makes clear, the shorter manual was a great improvement on the original, and resulted in improved customer acceptance of the product. ("Measuring the Value added by Technical Documentation: A Review of Research and Practice." by Jay Mead. Third Quarter 1998, Technical Communication Journal. p. 361-2.)

A question posted on LinkedIn -- What metrics do you use for technical writing? -- had a number of responses that dismissed page count as a measure as well:

If writers are evaluated on the number of pages they produce, they'll give you as many pages as they can churn out, not as many as the deliverable needs in order to be optimally useful to readers. Douglass H.

I would first agree that page count has no place in the discussion. There is frequently an inverse relationship between quantity and quality -- at least when viewed in the context of two versions of the same document. Paul M. A.

Measuring "page-count" is ridiculous. Writers can churn out pages and pages of drivel and gobbledygook to generate "pagecount". Editors can delete pages and pages of content to generate "pagecount". A better measure is the readers' view of the documentation. Do they like it? Can they use it to solve their problems? Dave G.

As you yourself (and several responders) have commented already, page count is a terrible way to measure productivity, especially as shorter often means better. For example, I spent several months *reducing* a user guide from 240 pages to 80, removing the repetition and waffle... The only useful matrices for documentation are those that measure comprehension. Julian M.

Finally, perhaps nothing is as persuasive against tracking word count as this simple Dilbert cartoon.

Metrics need to measure quality

Besides the points raised earlier, measuring word count has other drawbacks. If you're focusing only on word count, you're not focusing on the problem. You may just be publishing more and more text, without analyzing whether the text is solving a business need or problem.

If you're going to measure word count (quantity), you need to factor other important elements into your metrics. Jack Barr and Stephanie Rosenbaum define productivity with this equation:

(Quality X Quantity) / Time = Productivity.

("Documentation and Training Productivity Benchmarks," by John P. "Jack" Barr and Stephanie Rosenbaum. Technical Communication Journal. Volume 50, No. 4, Nov 2003. p. 471)
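To see how the quality term changes the result, here is a minimal Python sketch of the equation. Barr and Rosenbaum's formula doesn't specify units, so this sketch assumes quality is a 0.0 to 1.0 score (for example, a share of positive user ratings) and time is in hours. The function name and sample numbers are hypothetical.

```python
def productivity(quality: float, quantity: float, time_spent: float) -> float:
    """(Quality x Quantity) / Time, per Barr and Rosenbaum.

    Assumes quality is scored from 0.0 to 1.0 and time_spent is in hours;
    the original equation fixes neither scale.
    """
    if not 0.0 <= quality <= 1.0:
        raise ValueError("quality must be between 0.0 and 1.0")
    if time_spent <= 0:
        raise ValueError("time_spent must be positive")
    return (quality * quantity) / time_spent


# Hypothetical: the same 5,000 words written in 40 hours at two quality levels.
print(productivity(0.9, 5_000, 40))  # 112.5
print(productivity(0.3, 5_000, 40))  # 37.5
```

Identical word counts produce very different productivity scores once quality is factored in, which is the point of including it.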

This is the problem with measuring only word count: without a measure of your word count against some sort of result, there's no way to determine whether you're being productive. You can only be productive if you make progress toward an intended result. Merely publishing more content isn't necessarily a worthy goal in itself. The goal needs to tie to a larger objective, such as reducing support costs, increasing customer satisfaction, or improving user performance. These are all measures of quality.

To judge quality, some connection with users must be factored into a measurement; otherwise the quality measure is hollow. (Technical writers could measure the quality of each other's work, but it's better to have the actual user's feedback.) The problem is that measuring the effect on users is hard to do, so we often skip it. Instead, we make a leap to believe that publishing content will affect users in a positive way.

The widespread omission of any kind of user testing with help content has been unfortunate. In our discipline, had we been conducting user tests with our help content all along, we probably would have abandoned many unproductive forms of documentation (for example, maybe the long manual) and sought other solutions earlier (such as multimedia instruction).

Saul Carliner notes that although usability testing is important for measuring documentation quality, groups that test tend to do so sparingly: "the majority of those that did said that they test less than 10% of their products" ("What Do We Manage? A Survey of the Management Portfolios of Large Technical Communication Groups." Technical Communication Journal, 51:1. Feb 2004. p. 52).

However you do it -- testing content with users in usability labs, surveying satisfaction ratings among users, or embedding surveys into help topics -- it's important to measure quality through some kind of user interaction. Did our efforts reduce support calls? Were users pleased with the help material? Did it increase usage and adoption of the application? When you can introduce a quality measurement in the metric, it makes the tracking activity meaningful.

Can we still measure word count?

I wouldn't dismiss measuring word count altogether. Instead, I would multiply word count by the weight of the deliverable. A quick reference guide might be multiplied by 10, and a video by 20 -- or something similar. It wouldn't be hard to draw up a list of deliverables and multiply them by an appropriate weight measure to render them into output units. I mentioned quick reference guides and videos, but I could equally include other activities, such as social media updates, branding of help platforms, or formulation of strategies.
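Here's a minimal Python sketch of weighted output units. The weights of 10 for a quick reference guide and 20 for a video come from the paragraph above. The baseline weight of 1 for ordinary articles, the category names, and the sample week are hypothetical. The sketch also assumes every deliverable is counted in words (for a video, the words in its script).

```python
# Weight applied to each word of a deliverable. Quick reference guide (10)
# and video (20) follow the text; "article" = 1 is a hypothetical baseline.
DELIVERABLE_WEIGHTS: dict[str, int] = {
    "article": 1,
    "quick_reference_guide": 10,
    "video": 20,
}


def output_units(word_counts: dict[str, int],
                 weights: dict[str, int] = DELIVERABLE_WEIGHTS) -> int:
    """Convert word counts per deliverable type into weighted output units."""
    missing = set(word_counts) - set(weights)
    if missing:
        raise KeyError(f"no weight defined for: {sorted(missing)}")
    return sum(weights[kind] * words for kind, words in word_counts.items())


# Hypothetical week: 1,200 article words, a 150-word quick reference guide,
# and a video with a 100-word script.
week = {"article": 1_200, "quick_reference_guide": 150, "video": 100}
print(output_units(week))  # 4700
```

Keeping the weights in one table makes it easy to add rows for non-writing deliverables, such as strategy documents, once you decide how to count them.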

There are plenty of metrics that incorporate complicated algorithms to define a unit of work. For example, Pam Swanwick and Juliet Leckenby use the following formula:

(# topics or pages) x (complexity of deliverable) x (% of change)

+ (% time spent on special projects)

x (job grade)

("Measuring Technical Writer Productivity." Feb 2011. Writing Assist, Inc. http://www.writingassist.com/newsroom/measuring-technical-writer-productivity/)

Converting any kind of output into a unit of work helps you avoid deprioritizing every non-writing task. (On the other hand, if you want to prioritize writing so that you don't end up as the Mike Landry graphic shows, with writing occupying just a sliver of your day, then you might consider counting words alone. It all depends on what you want to prioritize.)

Since measuring quality is integral to a metrics analysis, I plan to embed surveys into help material to gather feedback from users. Our usability group uses Loop11 to conduct regular usability tests (such as this affinity diagramming study). If I could embed this Loop11 survey into a template on the wiki, and then insert the template into specific wiki help pages, I could assess the quality of the content. I could also bring in people off the street, so to speak, and ask them to evaluate the help material based on a list of questions, but I prefer to have real users assess the help in actual scenarios.

Conclusion

In most articles, metrics are used by managers to evaluate employee performance. Or they're used by tech comm departments to justify hiring and budgets. Few approach metrics as a way for individual contributors to establish a meaningful measure for their productivity and success. Yet for me, this is perhaps the main motivation I have for tracking metrics. I know that what I track, I can improve. And this improvement can take me to the next level.

I am interested to hear what metrics you track, and the results of the metrics in your company.

About Tom Johnson

I'm an API technical writer based in the Seattle area. On this blog, I write about topics related to technical writing and communication, such as software documentation, API documentation, AI, information architecture, content strategy, writing processes, plain language, tech comm careers, and more.

Note that the opinions I express on my blog are my own points of view, not those of my employer.