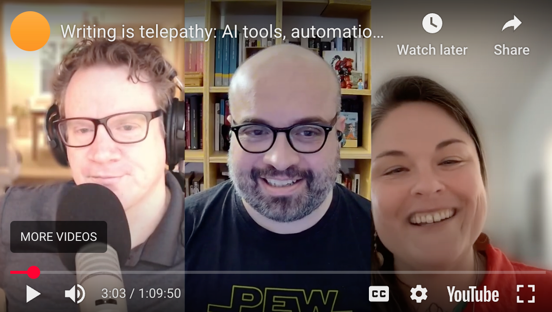

In this episode, Fabrizio (passo.uno) and I talk with CT Smith, who writes the blog docsgoblin.com and works as a documentation lead for Payabli. Our conversation covers how CT uses AI tools like Claude in her documentation workflow, why she builds tooling that doesn't depend on AI, her many doc-related projects and experiments, and how she balances a tech writing career with an intentionally offline life in rural Tennessee. We also get into reading habits, the fear of skill atrophy from AI reliance, and where the tech writer role might be headed.

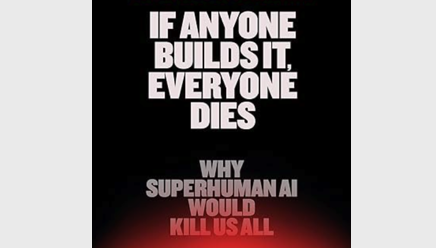

In If Anyone Builds It, Everyone Dies, Eliezer Yudkowsky, founder of the Machine Intelligence Research Institute (MIRI), and Nate Soares, its president, argue that superintelligent AI will lead to humanity's extinction. In the same way that humans used their intelligence to dominate all other forms of life, so too will superintelligent AI surpass and dominate humans. As a dominant entity, AI will likely operate with an alien set of preferences and values, and humans won't be important to superintelligent AI's goals.

As we head into the new year, I'd like to make a few tech comm predictions for 2026. I'm focusing my predictions within tech comm and basing them on my own experience. In this post I also broaden my scope a bit and comment on some wider issues and trends in a more opinionated way. While I'm basing these ideas on emerging research, this is a blog post, not a peer-reviewed journal article, so my predictions are speculative and based on general vibes.

As I sit down to write, I have a hodgepodge of ideas spewing in my head, but none that has taken hold in any immersive way. Usually a blog post has a single topic of focus, and I try to go somewhat deep into it. But this approach can be problematic: If I don't have an idea that catches my attention, I feel I have nothing to write about. Hence, I'll skip my writing time “until the muse strikes” or something. But then days pass without the muse striking, and I start to wonder if I've gone about the creative process all wrong.

This is a recording of our AI Book Club discussion of Co-Intelligence: Living and Working with AI by Ethan Mollick, held Dec 14, 2025. Our discussion touches upon a variety of topics, including the educator's lens, cautious optimism, the jagged frontier, personas, pedagogy, takeaways, and more. This post also provides discussion questions, a transcript, and terms and definitions from the book.

Co-Intelligence by Ethan Mollick isn't a riveting, controversial book on AI that will ratchet up your p-doom or send your head spinning about misaligned alien intelligences conspiring against humanity. Part of the appeal of the book is that so many of Mollick's observations seem like common sense, as they're born from someone who's working with and using AI on a daily basis for a variety of domain-specific tasks relating to his profession.

I recently read The War on Words: 10 Arguments Against Free Speech—And Why They Fail by Greg Lukianoff and Nadine Strossen, as part of the Seattle Intellectual Book Club. The book counters various arguments against free speech, overall presenting the case that free speech should be protected in most cases except obviously damaging scenarios like defamation, fraud, or immediate physical harm. In this post, I reflect on the costs of speaking openly, especially in the age of cancel culture, where you might not run afoul of the law but can still lose your job or face intense online animosity.

Isolation is something I've been thinking about lately. Although I have an abundant professional network and support probably 100+ engineers, PMs, and others, at times I do experience a sense of isolation in my role. I'm not sure if it's the holidays, or because now that I'm 50, I'm apparently at the bottom of the "U-shaped happiness curve," but I'm trying to understand how to navigate a world where my relationships with colleagues are increasingly transactional (purely information-based) and lack more social aspects. There are several reasons for isolation, and good reason to believe that AI will only increase our isolation.

In this episode, Fabrizio (from passo.uno) and I discuss the concept of documentation theater with auto-generated wikis, why visual IDEs like Antigravity beat CLIs for writing, and the liberation vs. acceleration paradox where AI speeds up work but creates review bottlenecks. We also explore the dilemmas of labeling AI usage, why AI needs a good base of existing docs to function well, and how technical writers can stop doing plumbing work and start focusing on more high-value strategic initiatives instead (efforts that might push the limits of what AI can even do). This post also contains a lot of short clips and segments from the episode, along with article links and a transcript.

This is a recording of our AI Book Club discussion of Nexus: A Brief History of Information Networks from the Stone Age to AI by Yuval Noah Harari, held Nov 16, 2025. Our discussion touches upon a variety of topics, including self-correcting mechanisms, alien intelligence, corporate surveillance, algorithms, doomerism, stories and lists, democracy, printing press, alignment, dictator's dilemma, and more. This post also provides discussion questions, a transcript, and terms and definitions from the book.

One of the things I'm doing this week, which has thrown me off my content productivity track, is trying to fix some errors in build logs for my reference docs. I have an SDK that has 8 different proto-based APIs; each API has its own reference documentation. The build script I run (to generate the reference docs) takes about 20 minutes and creates the HTML reference documentation for each API. The only problem is that I recently realized that the build script has some errors.
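When a build takes 20 minutes and produces a log per API, it can help to summarize the logs after the fact rather than rerun the build to find problems. The following is a minimal sketch, not the actual build tooling described here: it assumes (hypothetically) that each problem line in a log starts with a severity keyword like `ERROR` or `WARNING`, and the function names `summarize_log` and `summarize_build` and the API names are illustrative only.

```python
import re
from collections import Counter

# Assumption: problem lines begin with "ERROR" or "WARNING" (any case).
# Real doc generators may use a different format; adjust the pattern to match.
SEVERITY = re.compile(r"^\s*(ERROR|WARNING)\b", re.IGNORECASE)


def summarize_log(log_text: str) -> Counter:
    """Count error and warning lines in one API's build log."""
    counts = Counter()
    for line in log_text.splitlines():
        match = SEVERITY.match(line)
        if match:
            # Normalize case so "warning" and "WARNING" are counted together.
            counts[match.group(1).upper()] += 1
    return counts


def summarize_build(logs: dict[str, str]) -> dict[str, Counter]:
    """Map each API name to its error/warning counts, skipping clean logs."""
    results = {}
    for api, text in logs.items():
        counts = summarize_log(text)
        if counts:
            results[api] = counts
    return results


if __name__ == "__main__":
    # Hypothetical sample logs for two of the APIs.
    sample_logs = {
        "api_one": "Generating docs...\nERROR: unresolved reference Foo\nwarning: missing comment on Bar\n",
        "api_two": "Generating docs...\nDone.\n",
    }
    for api, counts in summarize_build(sample_logs).items():
        print(api, dict(counts))
```

Only APIs with at least one flagged line appear in the summary, so a clean build prints nothing, which makes it easy to tell at a glance which of the eight reference builds still need attention.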

I just finished Yuval Noah Harari's Nexus: A Brief History of Information Networks from the Stone Age to AI. The book provides a high-level analysis of information systems throughout history, with some warnings about the dangers of AI on today's systems. It's a remarkable book with many historical insights and interpretations that made history click for me. But the central idea of the book focuses on self-correcting mechanisms (SCMs) and how these SCMs are the linchpin of thriving democracies, so that's what I'll focus on in my review. The book also argues that AI is a form of alien intelligence that might pursue goals we never intended it to follow.

Tracking and communicating AI usage in docs turns out to be not only challenging technically, but also potentially full of interpretive pitfalls. There seems to be a double-edged sword at work. On the one hand, we want to track the degree to which AI is being used in doc work so we can quantify, measure, and evaluate the impact of AI. On the other hand, if a tech writer calls out that they used AI for a documentation changelist, it might falsely create the impression that AI did all the work, reducing the value of including the human at all. In this post, I'll explore these dilemmas.

As AI agents become more capable, there's growing eagerness to develop long-running tasks that operate autonomously with minimal human intervention. However, my experience suggests this fully autonomous mode doesn't apply to most documentation work. Most of my doc tasks, when I engage with AI, require constant iterative decision-making, course corrections, and collaborative problem-solving—more like a winding conversation with a thought partner than a straight-line prompt-to-result process. This human-in-the-loop requirement is why AI augments rather than replaces technical writers.

In this thoughtful guest post, Jeremy Rosselot-Merritt, an assistant professor at James Madison University, wrestles with generative AI and its impact on the technical writing profession. Jeremy examines risks such as decisions being made by leaders who don't understand the variety and complexity of the tech writer role, or the perceived slowness of output from human writers compared to the scale of output from LLMs. Overall, Jeremy argues that generative AI is another point on a long timeline of tech writers adapting to evolving tools and strategies (possibly now emphasizing context engineering), and he's confident tech writers will also adapt and continue as a profession.