Some Wiki Basics (Wikis)

Those are the two forces that brought me to wikis: the need for shared ownership of content, and the need for constant updates based on evolving information. Wikis seemed like the perfect platform for this new approach.

There are more than 100 types of wikis (see the wiki matrix), though only a handful are worth considering. Confluence is probably the wiki most suited to documentation purposes, but our organization already had a couple of wiki platforms available: SharePoint and Mediawiki. (SharePoint for internal infrastructure, Mediawiki for community collaboration.) I've tried both, and each has its advantages and disadvantages.

Regardless of the platform, most wikis have a similar set of features. I'll talk briefly about eleven wiki features in the context of Mediawiki, which is the same wiki platform that Wikipedia runs on. Mediawiki is open source wiki software and is widely used across the Internet. You can install Mediawiki yourself in about five minutes.

When you work with wikis, here are some things you'll find different from authoring with regular help authoring tools:

- Content Ownership

- User Pages

- Authoritative Content

- Recent Changes

- Talk Pages

- Everything on One Site

- Wiki Syntax

- Translation

- Content Re-use

- Managing Content

- Categories

I'll briefly explain each of these features before diving into the more important topic of community collaboration.

Content Ownership

No one truly owns the content on a wiki. Typically you “watch” pages that you're interested in. For example, if you published documentation on the ACME widget, you would most likely watch all of the ACME widget pages. If someone makes a change you don't like, you can undo the change.

Some wikis have a built-in workflow that forces revisions into a review state before the edits are live. However, most wikis try to avoid this publishing bottleneck and work on a model of trust. Most of the time, the edits are beneficial so we allow them by default. No one owns the content, so no one should enforce restrictions on editing the content.

Community ownership sounds ideal, but it has some drawbacks. Mainly, it's easy for someone to create pages based on a current need, and then completely neglect those pages when updates are needed. For example, let's say you currently have a project and you need to get some information out about it for others who are participating. The wiki works great for this, as it allows quick and easy publishing. It's almost an adrenaline rush to see your content published so quickly. Everyone can access and edit it, and it seems like a winning situation all around.

Then the project ends, and you no longer have a need for the content. Rather than go through and delete the content, as well as any links pointing into the content, it's much easier to simply abandon those wiki pages, because after all, you don't specifically own them anymore; they're on a wiki, so the community owns them.

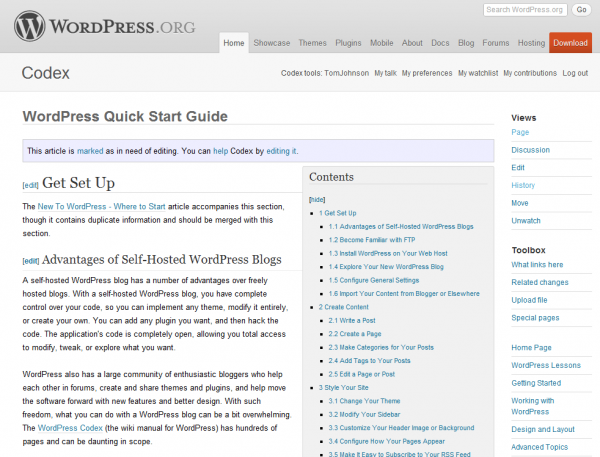

For example, a few years ago I wrote a lengthy quick start guide for WordPress. I published it on the WordPress Codex here: WordPress Quick Start Guide.

Who actually owns the content? Although I originally wrote it, I contributed it with a Creative Commons license so I no longer own it. At first, it was cool to see my content available in a public setting. I didn't need to get any permission ahead of time. It was just up and available as soon as I published the page.

But then WordPress came out with a new version, and another new version. I didn't bother to update the original content to match the later versions of WordPress. Eventually the content became out of date. Some people edited some parts of my WordPress Quick Start Guide, but with no one really invested in it, with no true ownership of the content, no one felt a responsibility to update all the content to keep it current.

The lack of ownership can lead to many wiki pages being out of date. Many times a wiki has so many pages, it's hard to tell who authored what, and whether the pages are still needed, or whether they're up to date.

Although community ownership is a neat ideal, ultimately each wiki should have a chief content owner, someone who is dedicated to the site as a whole and keeps a close eye on all the content assets. Without dedicated managers to review and update the content, wikis can quickly expand into bloated, outdated content messes.

On the flip side, when the community owns the content, community members can also keep content up to date when the original author flounders. Many times the original author isn't aware of all the places that content is out of date. As community members use the documentation, they often find places that need updating. Because the content is on a wiki, they can quickly and easily make these updates.

Withdrawing from tight control and ownership is a hard transition for many to make. One of the writers in another department at my work uses our wiki to publish some documentation. At the top of the documentation he added a note asking that no one delete or edit the content on the page, since it's official documentation.

I think he doesn't really agree with the underlying philosophy of a wiki. That philosophy is this: When you publish content on a wiki, it no longer belongs to you. It belongs to the community. Others can update the content as much as they want, without feeling that they are infringing on your content. It's not as if others are opening a file on your computer and making unsolicited edits. Instead, the content is owned by everyone, and everyone has a right to edit it.

User Pages

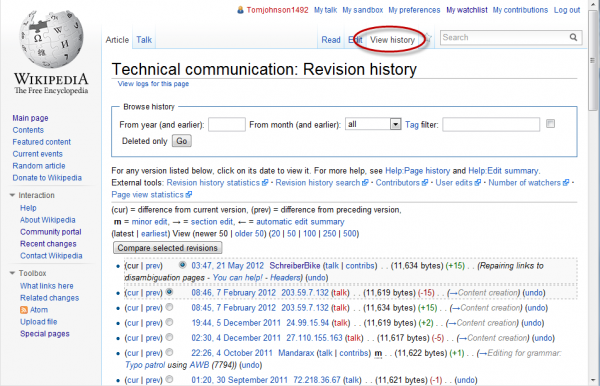

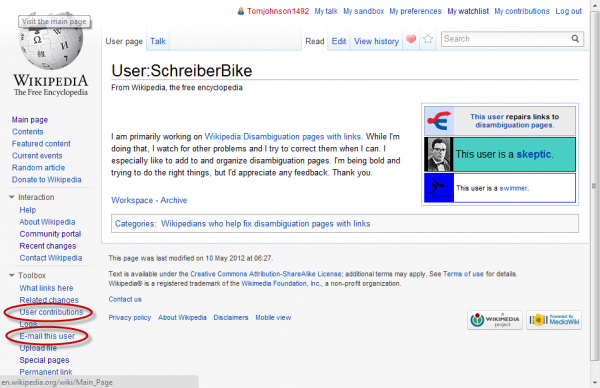

Because everyone can make edits, it's important to track who edits which pages and when. With Mediawiki, each user has a “user page.” Each page has a version history showing the user name of every user who made changes to the page. Each user name links to that user's user page.

Go to any Wikipedia page (such as the Technical communication page) and click the View history link. You will see a list of all the users who made changes to the page. If the user doesn't have a user page (because the user never logged in and created an account), the version history shows the user's IP address. You can usually click the user name to read a little bit more about the user.

From the user page on a Mediawiki site, click the User contributions link in the sidebar to see other edits the user has made on the wiki. You can also click the E-mail this user link to send an e-mail to the user through the wiki interface.

A lot of times, if you're unsure about the edits someone is making, you can assess the person's authority and credibility from his or her user page. The history of a page also lays bare all the people who contributed to the content, so you can read about the people who shaped the page's information.

As you look through the history of a page, you can see how many characters someone added or removed. A lot of times, edits are trivial, correcting a misspelled word or adding a missing word. When you see that someone has added 1,000 characters or more, the edits are more significant. In my experience, 90% of the edits are minor, such as adding a comma or capitalizing a word. Rarely do people make extensive edits. Writing is a lot of work, after all.
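The rule of thumb above (1,000 characters or more signals a significant edit) can be sketched in a few lines. This is only an illustration, not something Mediawiki provides: the function name, the sample history, and the exact cutoff are assumptions for the example.

```python
# Rough triage of page-history entries by how many characters each edit
# added or removed. The 1,000-character threshold comes from the rule of
# thumb above; the sample data is made up for illustration.

SIGNIFICANT_THRESHOLD = 1000


def classify_edit(size_change: int) -> str:
    """Label an edit 'significant' or 'minor' from its character delta."""
    return "significant" if abs(size_change) >= SIGNIFICANT_THRESHOLD else "minor"


# Hypothetical history entries: (user name or IP address, characters changed)
history = [
    ("Alice", 1),
    ("Bob", -12),
    ("Carol", 1450),
    ("192.0.2.10", 3),
]

labels = [(user, classify_edit(delta)) for user, delta in history]
for user, label in labels:
    print(f"{user}: {label}")
```

Removals count as much as additions here, since deleting a large block of text deserves the same attention as adding one.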

Authoritative Content

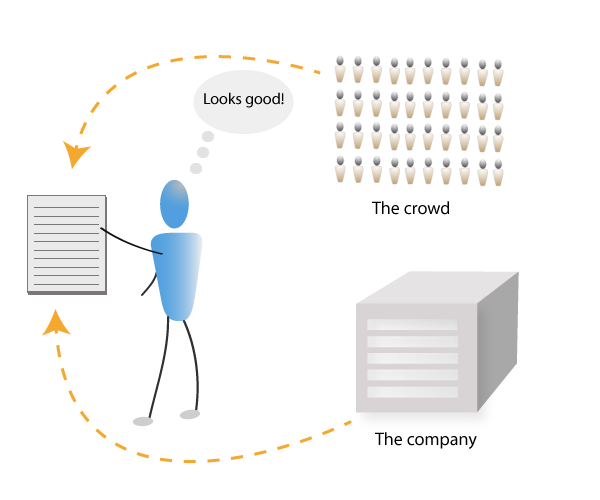

As I've worked with project managers to sell them on the idea of a wiki for documentation, many balk at the idea of giving users the ability to edit content. They feel users might introduce errors, communicate points poorly, and in general lower the authoritative quality of the content.

I've heard some users ask how they can know what content is official and what content originates from the community. With wikis, users may be left to wonder about the source of policies, procedures, and other material. Do they come from the company, or other users? Companies give up some of this authority when they move to a wiki.

The lack of authority may or may not be a big deal. It depends more on your product. If you're a pharmaceutical company, where you could be held liable for the information on your site, a wiki is probably not the way to go. And if you're a financial company or in some other regulated industry that must maintain tight control over the information, you probably need a more secure, authoritative method for maintaining and publishing your content.

However, in many cases, users are accustomed to getting information from other users. Users often turn to Google to search for how-to information anyway rather than looking at the authoritative content published by the company. Ultimately, with instructional content, the source matters less than the accuracy of the content. As a user reads and acts on the material, if it's inaccurate or confusing, it doesn't matter who it's from. Users are more interested in simple, relevant instructions that speak to their specific situation.

Recent Changes

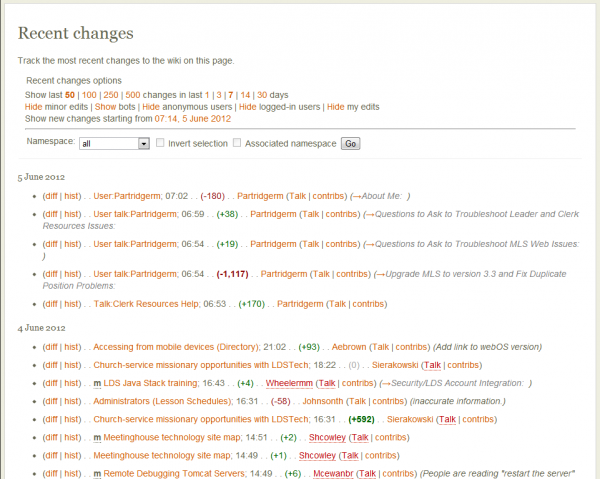

Remember that wikis are in a constant state of flux. You hope that the information continues to evolve into a more and more accurate, thorough, helpful state. So after you publish content, you're not simply done with the content. Instead, you must keep an eye on the changes. You usually do this by regularly checking the Recent changes.

If you go to Wikipedia and click the Recent changes link in the sidebar, you can see all the changes taking place on the wiki. The rate of change on Wikipedia is pretty astounding, with thousands of changes taking place each day. The number of changes you see is proportional to the size of your wiki and the activity of your community.

On our organization's wiki of about 1,000 pages, we typically see about 15 to 20 edits a day, usually from 3 to 4 different people. You can monitor the recent changes through an RSS feed, or you can even set up alerts to be notified of every single change that takes place on the wiki.

When you come into work in the morning, just as you check your e-mail, you also check the Recent Changes. It is somewhat astonishing to see changes take place in seemingly random ways on a large variety of pages. People are out there at all hours of the day, reading your content and making updates when you least expect it.

Each page has a detailed version history, so when you see a change, you typically compare the previous version with the new version to see what has changed. Users are prompted to write a summary of their changes to speed this content review. For example, a user might note that he or she added some punctuation, or clarified a section. If you know and trust the user, you can probably just assume that the edit is a beneficial one without reviewing it closely.

However, if the change is made by a user you don't know, you will want to carefully compare the new version with the old to evaluate the change.
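If you'd rather script this morning review than eyeball it, here is one way it might look. It's a minimal sketch that assumes your wiki exposes the standard Mediawiki web API (`api.php`) with its `recentchanges` query. The wiki address, the trusted-user list, and the sample response are all made up so the example runs offline.

```python
from urllib.parse import urlencode

# Hypothetical wiki location; replace with your own api.php endpoint.
API_URL = "https://wiki.example.org/w/api.php"

# Hypothetical contributors whose edits you accept without close review.
TRUSTED_USERS = {"Alice", "Bob"}


def recent_changes_url(limit: int = 50) -> str:
    """Build a Mediawiki API request for the latest changes."""
    params = {
        "action": "query",
        "list": "recentchanges",
        "rcprop": "title|user|timestamp|comment",
        "rclimit": limit,
        "format": "json",
    }
    return f"{API_URL}?{urlencode(params)}"


def needs_review(api_response: dict) -> list:
    """Return the changes made by users who are not on the trusted list."""
    changes = api_response.get("query", {}).get("recentchanges", [])
    return [c for c in changes if c.get("user") not in TRUSTED_USERS]


# Made-up response in the shape the API returns.
sample_response = {
    "query": {
        "recentchanges": [
            {"title": "ACME widget setup", "user": "Alice", "comment": "fixed typo"},
            {"title": "ACME widget FAQ", "user": "192.0.2.10", "comment": ""},
            {"title": "Editing settings", "user": "Dana", "comment": "clarified step 3"},
        ]
    }
}

for change in needs_review(sample_response):
    print(f'{change["title"]} (edited by {change["user"]})')
```

In practice you would fetch `recent_changes_url()` with an HTTP client and pass the decoded JSON to `needs_review`.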

I have only encountered one case of wiki vandalism, in which the user clearly made malicious edits. Mediawiki has an extension called Nuke that allows you to mass delete a user's contributions. If someone is vandalizing the wiki, administrators can undo all edits from that user as well as block that user from making any more edits on the site.

Talk Pages

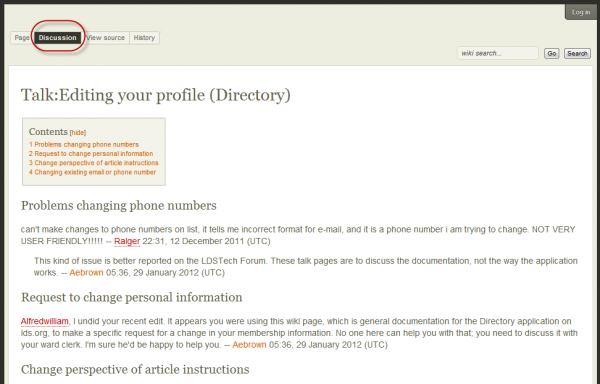

Many times readers don't want to make direct edits. Instead, they want to ask a question or make a comment about the content. As a companion to every page on Mediawiki sites, there's a Talk page where you can ask questions, raise issues, and otherwise carry on a discussion about the content on the page.

The Talk page functions similarly to a comments section, but it allows users to carry on this conversation on an entirely separate page that won't elongate the original article.

The Talk pages aren't usually familiar to many mainstream users. Many more users are familiar with comments sections below the pages. But the Talk page is really more appropriate on a wiki, because you're not merely making a comment; often the Talk page reflects proposed edits, stated reasons for changes, or other analysis of the content from a contributor's perspective.

Sometimes the Talk pages are more interesting than the actual article pages, because it's on the Talk pages where users share their opinions in a more candid, straightforward way. Does the article seem biased? Does it need to be broken up? Do the assertions need references? Do the instructions not work?

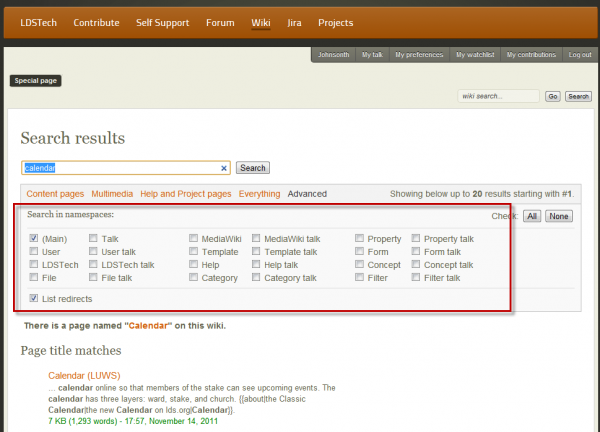

You may wonder whether all of this discussion clogs up the search results for the site. Not so. Mediawiki gets around this through the concept of namespaces. Articles are in the Main namespace, which is the default namespace that searches look in. But if you select the Talk check box on your search results, the results expand to include Talk pages as well. There are other namespaces where content resides as well (a File namespace, a Help namespace, and more).
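The same idea shows up if you search through Mediawiki's web API instead of the search box: you tell the search which namespaces to look in by number. The sketch below builds such a request. The wiki address is hypothetical; the namespace numbers shown (0 for Main, 1 for Talk, 6 for File, 12 for Help) are Mediawiki's standard ones.

```python
from urllib.parse import urlencode

# Hypothetical wiki location; replace with your own api.php endpoint.
API_URL = "https://wiki.example.org/w/api.php"

# Standard Mediawiki namespace numbers for a few built-in namespaces.
NAMESPACES = {"Main": 0, "Talk": 1, "File": 6, "Help": 12}


def search_url(term: str, namespaces=("Main",)) -> str:
    """Build a search request limited to the named namespaces."""
    ids = "|".join(str(NAMESPACES[name]) for name in namespaces)
    params = {
        "action": "query",
        "list": "search",
        "srsearch": term,
        "srnamespace": ids,
        "format": "json",
    }
    return f"{API_URL}?{urlencode(params)}"


# Articles only (the default), then articles plus their Talk pages.
print(search_url("editing settings"))
print(search_url("editing settings", ("Main", "Talk")))
```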

Regardless of namespaces, when Google indexes your site, Google completely ignores the separation of Talk pages from article pages and mixes everything into one big list of results.

Everything on One Site

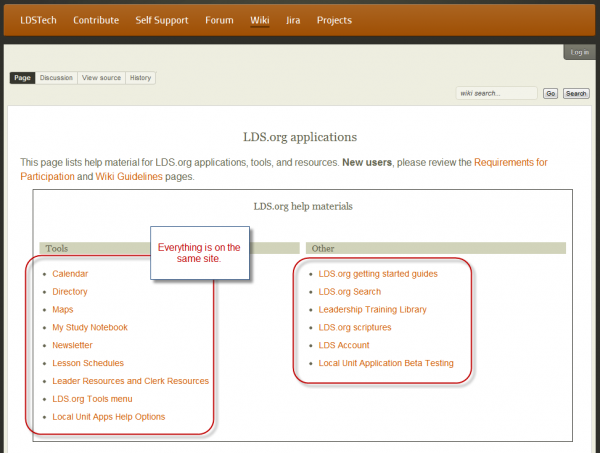

Although Mediawiki may separate content through namespaces, most articles will still be in the Main namespace. This presents another shift in content organization that may be new to technical writers. With a traditional help authoring tool, such as Flare, you create a project for a software application and put all the help topics in that project. When you're done, you publish the help files for that application in its own webhelp output. Searches in the content are restricted to the single product contained in the webhelp.

Not so with a wiki. A wiki is a website, and just as you don't create a new website every time you need to publish a set of articles, you also do not create a separate wiki every time you want to add pages for a new product. In my organization's wiki, we have help materials for at least two dozen products on the same site. If you search for something generic, like “Editing settings,” good luck finding anything in the results. At the very least, you must make sure you add the product name to your searches.

There are benefits to having everything on the same site. Let's say you're a developer and you want to stay on top of all the changes made for a lot of different products across the organization. By monitoring the Recent Changes section of a wiki, you can keep abreast of all the changes in a way that would be nearly impossible with a webhelp output from a help authoring tool. In this way, keeping everything together on the same site provides a unique advantage that other platforms can't offer: integrated awareness.

You can also cross-link one product to another more easily. If you have a lot of products all in the same family, having all the help material mixed together on the same site might be a good idea. You can help users find information about other products as they read information about one product.

However, having all product information on one site also has its downsides. The nature of wikis is to grow indefinitely. The more content you add, the more your search results get diluted. If it's unlikely that users interested in one product would search for another product, separating the content into different sites may provide better findability.

Another downside is that, if everything is on one site, branding content for different products in Mediawiki is not possible. For example, if you have one category for Product A, you can't customize the wiki skin to match Product A, yet make the skin look different for Product B. (You can, however, do this in Confluence.)

For some product managers, customizing the help's skin is important so that the user experience feels seamless between the application and help material. You can, of course, customize the overall Mediawiki skin. Just realize that customizing a wiki skin is not an easy task (at least with Mediawiki it isn't). I create a lot of custom WordPress sites, and I'm comfortable working with PHP code and CSS. But Mediawiki's code is harder to understand. It runs on PHP as well, but the PHP tags aren't as clear and well-defined as with WordPress.

I spent about a week creating a new skin for Mediawiki, because the upgrade from 1.15 to 1.19 completely broke the existing skin. We finally upgraded the skin, but had to involve some developers and interaction designers to work out a few kinks I couldn't figure out.

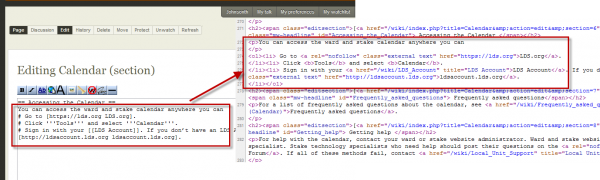

Wiki Syntax

Although wikis usually rely on standard CSS for their display in the browser, almost every wiki has its own custom syntax of asterisks, back ticks, brackets, and braces to format content. The idea is that wikis simplify publishing by not requiring users to know HTML. Instead of creating a bulleted list like this:

<ul>

<li>first item</li>

<li>second item</li>

<li>third item</li>

</ul>

You just create the list by adding

* first item

* second item

* third item

It gets pretty easy to do basic formatting with wiki syntax. Instead of coding a link as <a href="http://google.com">Google</a>, you simply write [http://google.com Google]. Tables are a little more complicated, but nothing too complex. (They involve pipes mixed with braces.)

When you save the page, the wiki syntax dynamically converts to HTML so that the formatting renders correctly in the browser. Viewing the page in the browser, you could view and copy the source code, and you wouldn't see any of the wiki syntax. You would just see HTML.
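To make that conversion concrete, here is a toy converter that handles only the two cases shown above: bulleted lists and external links. It's a rough sketch for illustration, nowhere near how Mediawiki's real parser works, and the function names are my own.

```python
import re

# Matches an external link such as [http://google.com Google]
EXTERNAL_LINK = re.compile(r"\[(https?://\S+) ([^\]]+)\]")


def convert_links(text: str) -> str:
    """Turn [url label] into an HTML anchor tag."""
    return EXTERNAL_LINK.sub(r'<a href="\1">\2</a>', text)


def wikitext_to_html(wikitext: str) -> str:
    """Convert '* item' lines to a <ul> list and other lines to paragraphs."""
    html = []
    in_list = False
    for line in wikitext.splitlines():
        if line.startswith("* "):
            if not in_list:
                html.append("<ul>")
                in_list = True
            html.append(f"<li>{convert_links(line[2:])}</li>")
        else:
            if in_list:
                html.append("</ul>")
                in_list = False
            if line.strip():
                html.append(f"<p>{convert_links(line)}</p>")
    if in_list:
        html.append("</ul>")
    return "\n".join(html)


source = "* first item\n* second item\n* [http://google.com Google]"
print(wikitext_to_html(source))
```

The reader's browser only ever receives the HTML that comes out the other end, which is why you won't find asterisks or brackets when you view the page source.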

Here's one more interesting characteristic of wikis: wiki syntax is nonstandard. The syntax for one wiki differs from the syntax for another wiki, so when you switch wikis, you have to learn the new wiki's world of syntax.

The idea of wiki syntax is to allow the masses to contribute, rather than limiting web publishing to the developers and techies. As such, wiki syntax attempts to be simple and learnable. But the instant you want a more sophisticated format and layout, wiki syntax can become trickier than merely hand-coding the HTML. To get around this, sometimes wikis actually allow you to mix wiki syntax with HTML.

Translation

At least with Mediawiki, the custom syntax used on wikis poses some problems for translation. Usually help authoring tools will export content in an XML format that translation memory systems can process (exporting and then importing the XML). However, with Mediawiki, if you export all the content in a category, the export mixes XML with wiki syntax. This means the exported content will include markup such as * and # and ' and [] and {}. (With Confluence, I believe the export is pure XML.)
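Here's a small sketch of what that mix looks like from the translation tool's side. The XML below is a simplified, made-up stand-in for a Mediawiki export (real exports carry more elements and an XML namespace), but the key point holds: the page body sits inside a `<text>` element as raw wiki syntax. The function and pattern names are assumptions for the example.

```python
import re
import xml.etree.ElementTree as ET

# Simplified, invented export: the XML wrapper is clean, but the page body
# inside <text> is still wiki syntax.
EXPORT = """<mediawiki>
  <page>
    <title>Editing settings</title>
    <revision>
      <text>== Steps ==
# Click '''Settings'''.
# See [[Help:Contents|the help pages]].
{{Note|Changes save automatically.}}</text>
    </revision>
  </page>
</mediawiki>"""

# Wiki markup tokens a translation tool would find mixed into the text:
# bold quotes, link brackets, template braces, and list or heading
# markers at the start of a line.
MARKUP = re.compile(r"'''|\[\[|\]\]|\{\{|\}\}|^[*#=]+", re.MULTILINE)


def markup_tokens(xml_source: str) -> dict:
    """Map each page title to the wiki markup tokens found in its text."""
    root = ET.fromstring(xml_source)
    found = {}
    for page in root.iter("page"):
        title = page.findtext("title")
        text = page.findtext("revision/text") or ""
        found[title] = MARKUP.findall(text)
    return found


tokens = markup_tokens(EXPORT)
print(tokens["Editing settings"])
```

Every one of those tokens is something a translator could accidentally break, which is the heart of the problem.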

Now, Mediawiki may be a poor wiki to use for documentation. Mediawiki isn't meant for technical documentation but rather as an encyclopedia, and Wikipedia's translation strategy doesn't involve exporting the content to a translation system and then reimporting it.

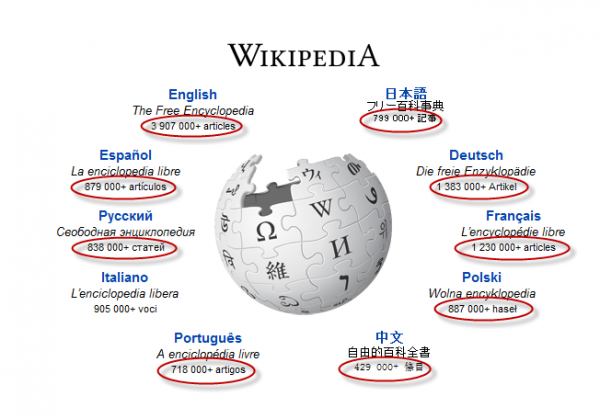

Instead, with Wikipedia, each translated site is an entirely different version. The English and Spanish and Portuguese Wikipedia sites do not have a commensurate number of pages. An article in Spanish may be half as long as the same entry in English, if the article exists at all. The translated sites are other members of a wiki family, rather than translated versions of the English wiki.

Not all wikis handle translation as wiki families. Actually, it's more common among Mediawiki sites to see a series of subpages showing the translated pages. For example, below the English Mediawiki page, you may see a list of other languages. The articles in the other languages would be translated, but not the overall wiki interface.

If translation is a strong requirement, you might want to think about a platform other than wikis. Even if you do manage to translate the wiki content, you run into several other issues after the translation. When users make edits in other languages, how will you review and evaluate the changes? You will likely need a language lead for each language you translate to. The language lead can evaluate the changes to decide whether they're beneficial or not.

Also, if you translate the template names and the category names, managing the wiki becomes more difficult. If you don't translate the template names or category names, you limit the ability of community users in other languages to manage and administer the wiki. But if you do translate all of these names, you impair your own ability to manage the wikis in other languages.

Finally, because wikis are meant for continuously evolving information, you will need to decide how often you propagate the changes through to the other languages. Will you immediately translate each and every change made on the English wiki to your other language wikis? Or will you make an effort to update the translations on a quarterly basis? Will it bother you to have some content that is out of sync in different languages? And what happens when you have some proactive community volunteers update some of the pages but not others -- how will you keep track of what has been updated and what hasn't? In sum, translation poses a substantial new layer to consider with wikis.

Content Re-use

Another common feature that technical writers need in publishing help material is the ability to re-use content. If you have a common series of steps that preface a lot of different tasks, such as how to access a Settings panel, you usually want to re-use this language so that you reduce translation costs. If you have 10 different variations in the way you say the steps, you end up translating 10 times the content. You also increase your own workload considerably. Content re-use can help you avoid this.

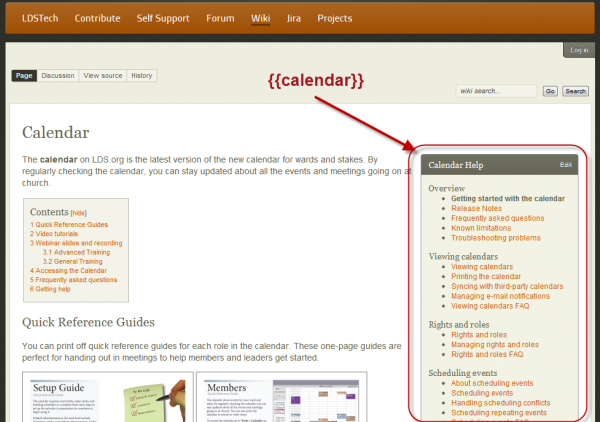

On Mediawiki, you can re-use content, but the content re-use features are not as easy as they are with a help authoring tool. With Mediawiki, the way you re-use content is through templates. You create a template, and then you insert that template into any page. You can change the template and your changes will be reflected in all the places the template is inserted. You can also see all the pages that the template is inserted in.
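In Mediawiki you insert a template by putting its name in double braces, such as `{{OpenSettings}}`. The sketch below mimics that behavior on a tiny scale to show why one change to the template reaches every page. It is an illustration only: the template name, the page titles, and the functions are all hypothetical, and real templates also support parameters, which this ignores.

```python
import re

# Hypothetical template and pages, for illustration only.
templates = {
    "OpenSettings": "Click the gear icon, then click Settings.",
}

pages = {
    "Change your password": "{{OpenSettings}} Then click Password.",
    "Change your e-mail": "{{OpenSettings}} Then click E-mail.",
    "About the product": "No shared steps here.",
}

# Matches a simple template call like {{OpenSettings}} (no parameters).
TEMPLATE_CALL = re.compile(r"\{\{([^{}|]+)\}\}")


def render(page_text: str) -> str:
    """Replace each {{Name}} with the template's text; leave unknown names as-is."""
    return TEMPLATE_CALL.sub(
        lambda m: templates.get(m.group(1).strip(), m.group(0)), page_text
    )


def pages_using(template_name: str) -> list:
    """List the pages that insert the given template."""
    return sorted(
        title
        for title, text in pages.items()
        if template_name in (name.strip() for name in TEMPLATE_CALL.findall(text))
    )


print(render(pages["Change your password"]))
print(pages_using("OpenSettings"))
```

Edit the one entry in `templates` and both pages pick up the new wording the next time they render.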

Templates are how you create sidebar navigation as well. By default, wikis are usually flat. They don't have the tree-like table of contents on the left (well, Confluence does; Mediawiki and many other wikis do not). As a result, if you want to provide an easy navigation system for users, you have to create a template, and then update that template each time you alter the navigation.

At best, the ability to re-use content on Mediawiki is somewhat rudimentary.

Managing Content

With a help authoring tool, you often manage your content in various folders and tools, or you have an administrative interface that you access to manage content. With Mediawiki, you don't have an administrative interface. The browser itself is the interface.

Let's say, for example, that you want to see all the templates that you've created. You search for special:templates in the wiki's search bar and the results show a list of the first 50 templates in your wiki. If you want to see all your categories, you search for special:categories. To see all pages, you can search for special:allpages.

You can manage content by doing a variety of queries like this. You can see the longest pages, shortest pages, orphaned pages, most popular pages, unused templates, unused categories, unwatched pages, a file list, and so on. You can run these queries because the wiki content is stored in a database. There's also a page on the wiki containing links to each of these queries.
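Since each of these queries is just a page with a predictable name, you can keep your own cheat sheet of them. Here's a minimal sketch that builds the links. It assumes a wiki served under a `/wiki/` path at a made-up address; the special page names are standard Mediawiki ones, though which reports are available varies by version and configuration.

```python
from urllib.parse import quote

# Hypothetical wiki address; adjust to match how your wiki serves pages.
BASE_URL = "https://wiki.example.org/wiki/"

# A few of the built-in maintenance reports and their special page names.
REPORTS = {
    "all pages": "Special:AllPages",
    "categories": "Special:Categories",
    "longest pages": "Special:LongPages",
    "shortest pages": "Special:ShortPages",
    "orphaned pages": "Special:LonelyPages",
    "oldest pages": "Special:AncientPages",
    "unused templates": "Special:UnusedTemplates",
    "unused categories": "Special:UnusedCategories",
    "file list": "Special:ListFiles",
    "index of special pages": "Special:SpecialPages",
}


def report_url(name: str) -> str:
    """Return the URL for one of the reports listed in REPORTS."""
    # Keep ':' and '/' unescaped so the page name stays readable.
    return BASE_URL + quote(REPORTS[name], safe=":/")


for name in REPORTS:
    print(f"{name}: {report_url(name)}")
```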

Managing the content through the interface is a little uncomfortable if you don't know everything you can access. A lot of times I've run across a query (like special:ancientpages) and thought, hey, I didn't know I could do that. With a help authoring tool, you can often look at the various buttons and menus and browse what you don't know. But if you don't even see something that looks unfamiliar, it's less likely that you'll start exploring it.

That said, it's rewarding to be working so regularly in the web browser. One of the shortcomings of help authoring tools is their distance from the web. The outputs from help authoring tools often feel a bit like they belong in the pre-Internet era. Before Flare's HTML5 skin output, most webhelp skins from help authoring tools looked rather dated. With wikis, you're working in a browser and the display is entirely browser-based. Wiki sites look much more web-like.

Categories

Finally, I'll cover one last unique feature of wikis: categories. Categories aren't that unique, but Mediawiki implements them in a way that technical writers may find unfamiliar. At the most basic level, you add all the pages that you want grouped together to a category. With a help authoring tool, you would group similar pages into the same folder. However, because wikis are a flat system, without the nested hierarchy that you find in a table of contents, categories provide the default system of navigation.

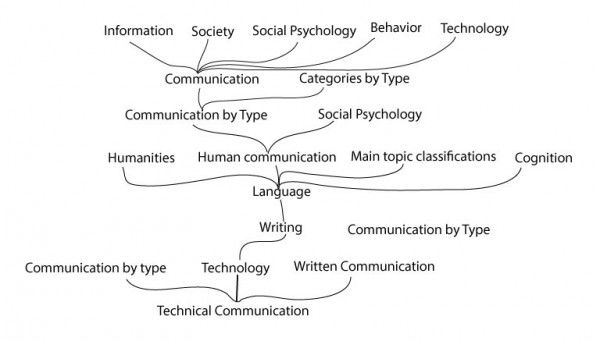

You can have categories and sub-categories and sub-subcategories. That may seem somewhat normal. But here's the interesting thing with Mediawiki: all categories must have a parent category that becomes more and more general until you reach the highest level of abstraction. The categories form a complete hierarchy of classification.
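Climbing from a specific category to the most general one is easy to picture as a chain of parent lookups. The sketch below uses an invented category tree, not Wikipedia's real one, and simplifies by giving each category a single parent (in Mediawiki a category can belong to several).

```python
# Hypothetical category tree (child -> parent), made up for illustration.
PARENT = {
    "Widget tutorials": "Widget documentation",
    "Widget documentation": "Product documentation",
    "Product documentation": "Documentation",
    "Documentation": "Contents",
}


def path_to_top(category: str) -> list:
    """Follow parent categories upward until there is no parent left."""
    path = [category]
    seen = {category}
    while path[-1] in PARENT:
        parent = PARENT[path[-1]]
        if parent in seen:  # guard against accidental loops in the tree
            break
        path.append(parent)
        seen.add(parent)
    return path


print(" > ".join(path_to_top("Widget tutorials")))
```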

This category hierarchy can be somewhat amusing. For fun, visit any Wikipedia page, scroll to the bottom, and then start browsing up the parent categories. Here's the upward path when you start at the Technical Communication Wikipedia page.

You may have missed the category feature on Wikipedia because categories aren't that useful. In fact, if they're intended as a navigation aid, they're almost invisible, buried at the very bottom of the page. No doubt their demotion in the visual hierarchy of the page has something to do with their uselessness as a method for finding content.

Instead of using categories to navigate content, wikis often rely on cross-linking within topics. And this turns out to be one major advantage to wikis. Because all the content is on the same site, there are many more opportunities for cross-linking. Wikipedia has so many cross-links, or internal links, meaning links from one Wikipedia page to another, that all of these links add a tremendous SEO influence with Google's search engine. You can see this influence each time you search in Google. Wikipedia pages almost always rank near the top.

About Tom Johnson

I'm an API technical writer based in the Seattle area. On this blog, I write about topics related to technical writing and communication, such as software documentation, API documentation, AI, information architecture, content strategy, writing processes, plain language, tech comm careers, and more.

Note that the opinions I express on my blog are my own points of view, not those of my employer.