Reviewing draft DITA content with subject matter experts: 6 essential points

Lately I have been exploring different ways to let subject matter experts review DITA content. In a previous post, I looked at using easyDITA to facilitate the review. In this post, I'll look at OxygenXML's Webhelp with Feedback output for conducting reviews.

What makes for a good review process?

By far the best review method I've ever used was at Badgeville with Google Docs. Because all employees used Google Docs for their business suite of tools, using Google Docs to review technical material made for a perfect fit. Lots of engineers and product managers reviewed content frequently, abundantly, and in a timely manner through Google Docs. The best review process, I'm certain, is to implement what works in your organization.

However, working with DITA creates some challenges. You can't exactly push your DITA content into Google Docs for the purposes of review and then pull it back into your XML editor because there's almost no integration between these two platforms. Even if you could, your company might not allow you to store your content on Google Docs.

1. Close resemblance to the actual output

While Google Docs had good interactive annotation capabilities that enabled parallel workflows and reviewer-to-reviewer conversations, it didn't reflect how the help would really appear. And that's an important aspect of a review process: reviewers should be able to get a sense of what the help will really look like. The closer you can approximate the actual published format, the better.

This is where OxygenXML's Webhelp with Feedback really shines. If Oxygen's webhelp format is your output, reviewers comment directly below each topic in the published help, so they get an exact sense of what the help will look like: the hierarchy of the TOC, the display of content, the conditional profiling, and more.

Some review processes output the content to PDF, Word, or another format that is easier to annotate but doesn't reflect what the help output will really look like. Such formats might lead the reviewer to misunderstand the organization and semantic relationships between the help topics.

2. Continuous iterations of the review

Another key feature of a good review process is being able to update the material continuously while the review is under way. Not everyone reviews the material at the same time. If Joe gives feedback that you incorporate into the help before Sally reviews it, you've just iterated the review and made it more efficient. Now when Sally reviews it, she's reviewing version 2.

If you incorporate Sally's comments before Mary reviews it, Mary is essentially reviewing version 3. This means your help has gone through three iterations during the review cycle, which is much more efficient than sending out the help content for a single review. It's more efficient because now your revisions also undergo reviews. In the single-time-review paradigm, unless you send out your revisions for review, you may never know if your revision actually matches the reviewer's recommendations.

To facilitate iterative review processes, you need to have a central source that you can continuously update. If people have to download files locally to their drive, you won't be able to update the source without sending out an email to all your reviewers containing the updated copy. If you send out new version after version to a group of reviewers, they will quickly tire of your emails and probably hold off on all reviews while they wait for the content to finalize.

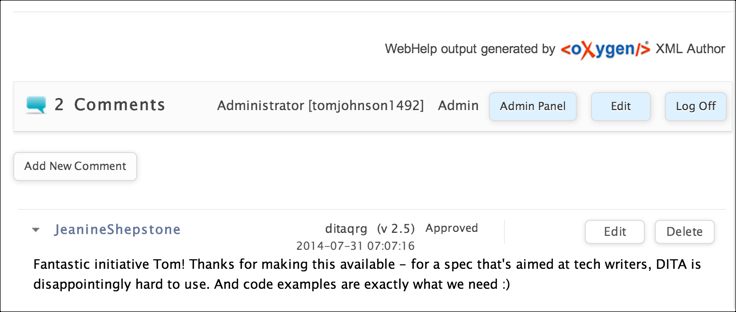

Oxygen's Webhelp with Feedback output allows you to publish new versions of the content without erasing the comments on the previous versions. As long as the topic's ID remains the same, the previous comment history remains associated with each new version of the content.

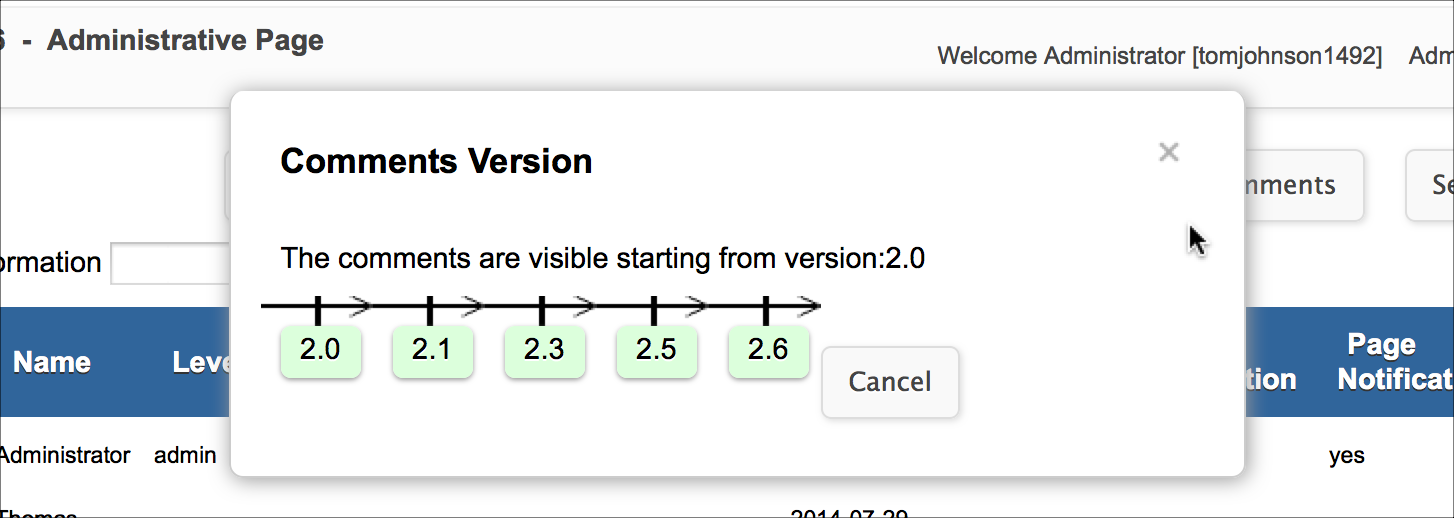

You can also hide comments associated with previous versions of the help. For example, if you have a lot of comments around a feature that you fixed, you can push out a new version (e.g., 2.0) and indicate that comments should appear only from version 2.0 onward.
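To make the behavior concrete, here is a minimal Python sketch of the idea: comments are keyed by topic ID and tagged with the version they were left on, so publishing a new version never deletes them, and a minimum-version setting controls which ones are shown. This is only an illustration of the concept, not how Oxygen actually stores comments. The names `Comment`, `CommentStore`, `visible`, and the `install_overview` topic ID are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


def parse_version(text: str) -> tuple[int, ...]:
    """Turn "2.0" into (2, 0) so versions compare numerically.

    Assumes every version uses the same number of parts ("1.0", "2.0").
    """
    return tuple(int(part) for part in text.split("."))


@dataclass
class Comment:
    topic_id: str  # stays the same across published versions
    version: str   # version of the help the comment was left on
    author: str
    text: str


@dataclass
class CommentStore:
    """Hypothetical store: comments hang off topic IDs, not published files."""

    comments: list[Comment] = field(default_factory=list)

    def add(self, topic_id: str, version: str, author: str, text: str) -> None:
        self.comments.append(Comment(topic_id, version, author, text))

    def visible(self, topic_id: str, min_version: str = "0.0") -> list[Comment]:
        """Return the topic's comments left on min_version or later."""
        floor = parse_version(min_version)
        return [
            c
            for c in self.comments
            if c.topic_id == topic_id and parse_version(c.version) >= floor
        ]


store = CommentStore()
store.add("install_overview", "1.0", "Joe", "Step 3 is missing a prerequisite.")
store.add("install_overview", "2.0", "Sally", "The prerequisite looks right now.")

# Republishing keeps the full history attached to the same topic ID.
all_comments = store.visible("install_overview")

# Hiding older feedback: only show comments from version 2.0 onward.
recent = store.visible("install_overview", min_version="2.0")
```

In this sketch, the full history holds both Joe's and Sally's comments, while the filtered view holds only Sally's. If the topic ID changed between versions, the lookup would find nothing, which is why keeping the ID stable matters.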

3. Conversations between reviewers

Another important feature of the review process is support for reviewer-to-reviewer conversations. Sometimes engineers and product managers disagree, and it's much better politically to let the rams who want to butt heads do the butting against each other rather than against you.

It's also hard to win arguments with engineers, who usually have a complex understanding of the technical details of a situation. I find it much better to facilitate conversations among divergent parties so they can talk to each other during times of disagreement. I like to let them fight it out amongst themselves (if it comes to that).

Oxygen's comments allow reviewers to reply to each other, but more importantly they simply allow all reviewers to see the thread of comments for a particular topic. In contrast to comments that are given in a 1:1 situation, comments from the group visible to the entire group do a much better job in letting every reviewer know where disagreements exist, who holds what opinion, and why.

If you update a topic based on a review and another reviewer dislikes the update, you have a "paper trail" showing why you made the change.

4. Support for asynchronous review cycles

Some people tell me that they review content by getting every reviewer together in the same room and going through the material topic by topic. While that method sounds ideal, I think it isn't very practical.

First, scheduling a time when everyone is available is difficult. You may get several but not all reviewers.

Second, there may be many remote reviewers, such as field engineers or support agents who should be included in the review. They may not be as present and visible as the developers around you, but their feedback is perhaps more important to gather.

Third, it's often difficult for all people to review content at the same pace. If you have a lot of code snippets, it's not feasible for everyone to look through the content at the same level of detail in the same amount of time. One person may speed through while another prefers to study it line by line.

Fourth, reviewing all at once misses out on the iterative review possibilities. It gives the sense of finishing all the review in one go. I doubt you would be able to round up the reviewers to review your revisions a second or third time.

Finally, discussions during doc reviews often get derailed on tangents. Developers may devolve into a discussion about ways to improve confusing interfaces (a topic surfaced by confusing documentation). Moving toward interface simplicity is good, and finding more efficient ways of improving the application is always welcome, but these discussions don't accomplish the immediate purpose of the doc review meeting. You end up with a lot of discussion, not all of it focused on the documentation.

Further, many times discussions may seem clear to developers, but the points may not be clear to the writer. Developers revel in jargon and may quickly reference more advanced concepts, assuming that you get their point from a few mentions about this and that technique. In reality, the tech writer often needs more concrete explanations and accurate outcomes of discussions. In this case, having the information written down is more helpful.

5. Easy navigation among the help topics

Reviewers should be able to navigate the help's TOC and go to the topics they most want to review, especially if they worked on certain features. One thing I disliked about easyDITA's reviewing system is its ticketing paradigm. With easyDITA, when you're done with a topic, you create a ticket for someone to review the topic and send that ticket to the reviewer. When the reviewer completes his or her review of the topic, you get a note back saying the topic has been reviewed. You can then approve the ticket.

The problem is that it's very tedious to pick out the topics that people should review and issue tickets for them. Even though you can include multiple topics in one ticket, different reviewers will have different specializations. You may not always know who the right person to review a topic should really be. It's better to let the reviewers navigate the table of contents themselves so they can naturally gravitate towards the topics they feel compelled to review. (In easyDITA, you can also send a reviewer a ditamap that leads to the TOC in easyDITA -- it's just not as immediately intuitive for the reviewer.)

With Oxygen's Webhelp with Feedback output, you can allow people to browse the help and find what they want to review. Of course this could backfire as well. Without a specific direction about what to review, the reviewer may not review anything at all, especially if your help is so massive as to be overwhelming.

6. Display of other reviewers' comments

Finally, a good review system should let other reviewers see the history of discussion and review for a particular topic. As a reviewer, I would be interested to know how others have commented on topics. Comments from other reviewers may spur new thoughts and feedback from me that might otherwise lie dormant without seeing the discussion.

Additionally, seeing the discussion lets reviewers know that others have weighed in on a topic and given the technical writer feedback. This reduces the time that reviewers spend reviewing material. If others have already provided feedback on a topic, there's little point in supplying the same information.

A redundant review process would have all reviewers provide feedback in isolation, repeating much of the same information that other reviewers have already submitted. By providing comments below topics, reviewers can quickly glance and see if the appropriate feedback has already been submitted about the topic and then move on. This reduces the strain on reviewers to get through the material.

Getting started with OxygenXML's Webhelp with Feedback

Most of this post involves strategic exploration about the best methods for technical review and shows how OxygenXML's Webhelp with Feedback output might provide a win for each of these points. But I haven't yet used OxygenXML's Webhelp with Feedback in an actual reviewing situation. To be honest, I installed it just yesterday in preparation for an upcoming review, so I hope to follow up this post with a "Part 2" at a future date.

For instructions on installing and using OxygenXML's Webhelp with Feedback, see the notes in my DITA QRG here: Webhelp with Feedback.

See also my page on Handling SME Review in my DITA QRG.

About Tom Johnson

I'm an API technical writer based in the Seattle area. On this blog, I write about topics related to technical writing and communication — such as software documentation, API documentation, AI, information architecture, content strategy, writing processes, plain language, tech comm careers, and more. Check out my API documentation course if you're looking for more info about documenting APIs. Or see my posts on AI and AI course section for more on the latest in AI and tech comm.

Note that the opinions I express on my blog are my own points of view, not those of my employer.