Survey results: Technical writers on AI

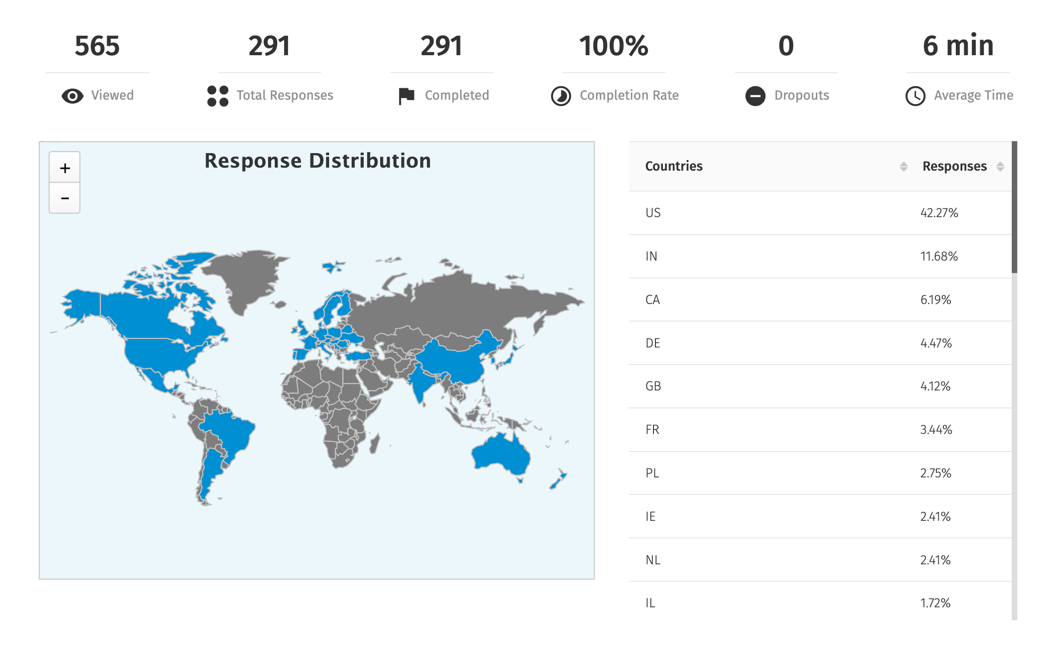

General survey information

- Survey date: April 3 to April 9

- Number of participants: 291

- Means of promotion: my blog, newsletter, LinkedIn, and Twitter

- Survey participant locations: US: 42%, India: 12%, Canada: 6%, Germany: 4%, Great Britain: 4%

- Average time participants spent on the survey: 6 minutes

The survey questions and raw responses are available on QuestionPro.

1. Given AI tools' ability to simplify complex content, how worried are you about the future of your job as a technical writer?

With 45% mildly concerned, 22% concerned, and 9% very concerned (76% in total), it’s safe to say this is a huge issue in the industry. I’m not sure any other technology has ever made so many people in the tech comm industry so concerned. This widespread concern justifies the continued focus on this topic.

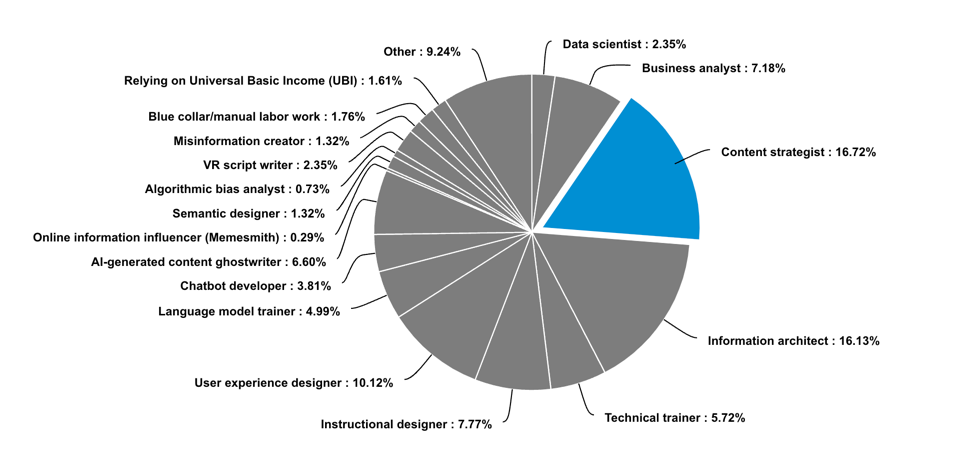

2. If the technical writer role vanishes in a few years, which profession would you most likely transition to (assuming you're a tech writer now)?

Top responses:

- Content strategist: 16%

- Information architect: 16%

- User experience designer: 10%

- Instructional designer: 8%

- Business analyst: 7%

- AI-generated content ghostwriter: 7%

As a hat tip to the scenario’s existential absurdity, some respondents indicated their next profession might be cat sitter, farmer, or homesteader (options that could be combined into one role, actually).

More seriously, though, content strategy and information architecture look like interesting alternative careers to me. Many technical writers already do some degree of content strategy and IA. Anytime you plan content for a large project and strive to align it with marcom content, for example, you’re doing content strategy. When you plan the information flow in your developer portal, adding metadata to topics so they surface in search, you’re doing IA.

Personally, I find both content strategy and information architecture compelling. Both seem to align with my recent interest in systems thinking.

One question is whether these other disciplines are equally at risk. Given that writing isn’t the primary artifact of these disciplines, perhaps not. But findability is certainly shifting from a search-dominant model to an AI-based conversational one, resetting many of the expectations and lessons of IA. And content strategy is also morphing, as Zoomin notes, toward dynamically assembled content, with AI mashing up all existing sources into a coherent article. It would be interesting to probe just how much AI might also transform these alternative disciplines that tech writers are considering.

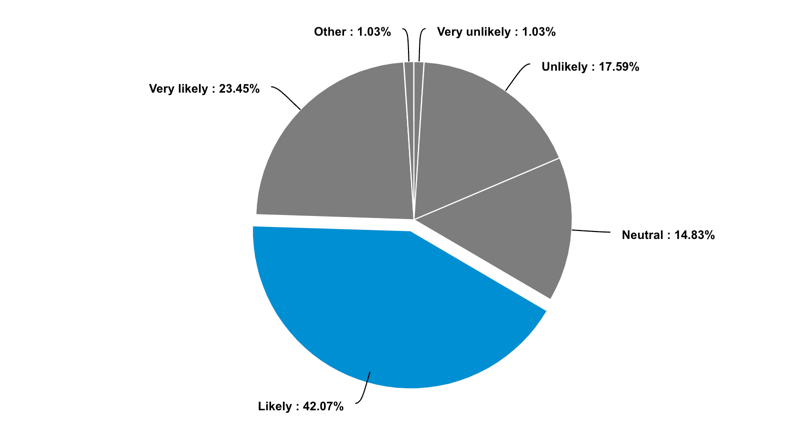

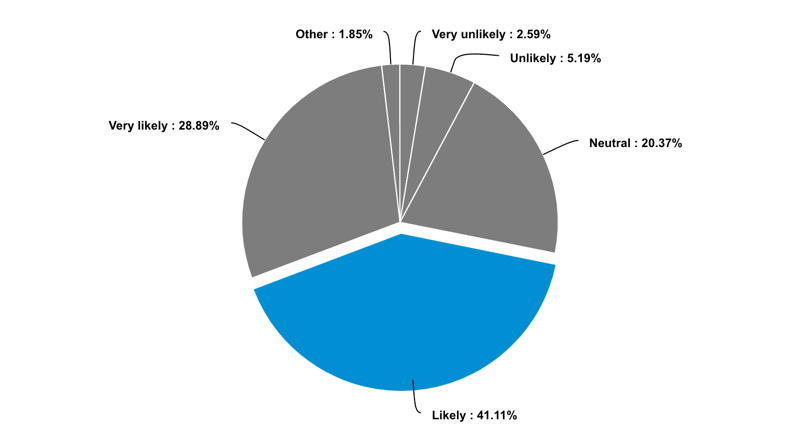

3. Given that writing makes up only a fraction of a technical writer's tasks, how likely is it that AI tools will have a transformative impact on tech comm?

With 42% likely and 23% very likely, yes, most people feel AI will be transformative. These responses are interesting because they suggest the greatest transformation won’t just be in AI’s ability to write docs. AI also has the potential to influence how technical writers handle the non-writing aspects of their role.

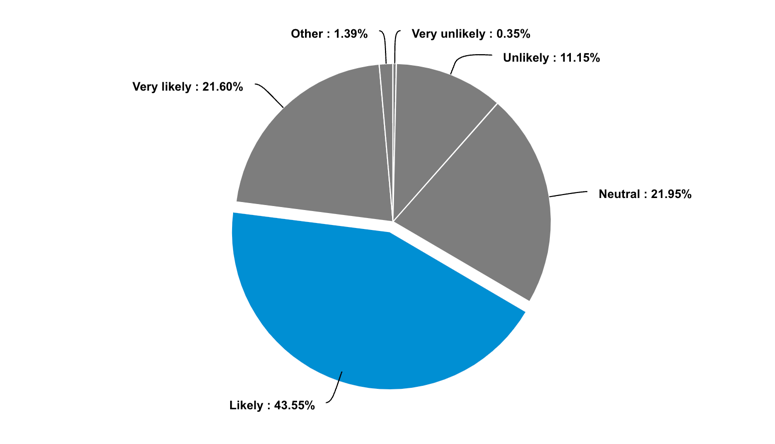

4. Because AI can read, explain, and generate code pretty well, will developer doc jobs be more impacted than more creative-oriented writing jobs?

With 44% likely and 22% very likely, it’s clear most respondents feel that the most significant tech comm transformations will occur in API docs. As a result, will we see writers shift their focus toward more human-written, conceptual documentation? What about all the technical skills people have spent so long acquiring? Will that technical depth no longer give you a leg up on other tech writer candidates? What will set one candidate apart from another?

Even more difficult, how will interviewers be able to distinguish one writer’s skills from another’s, as Fabrizio Ferri Benedetti notes in Hiring technical writers in a ChatGPT world?

On the other hand, articles like Kayce Basques’ post on the role of plugins with ChatGPT suggest that accurate, clear, and comprehensive documentation that aligns with user keywords and terms will be important for companies that want to surface their content in AI engines. Someone will need to make sure documentation rises to this bar, so maybe the importance of good docs will elevate the tech writer’s role after all.

5. In an AI-driven future, will creating semantic, structured content be needed to optimize large language models' performance with company content?

With 41% likely and 29% very likely, this seems to be a nod to the DITA CCMS crowd, but some respondents might not have understood what “structured authoring” meant in the question. In the freeform comments, some said “I can’t quite parse this question,” “Question is unclear…,” and “Not certain if I understood the question…”

Zoomin says that “…structured content … can dramatically help with the definition of content semantics to make the content highly optimized for programmatic consumption via search, filtering and personalization.” And Basques’ post emphasizes the need for developer docs to follow the OpenAPI spec, which is the most common structure in dev docs.

However, by structured authoring, did the audience mean that content would need to be categorized into task, concept, reference, troubleshooting, and glossary content types? And would it also need to include other metadata indicating the version, language, operating system, and geographical region so that AI could better parse and surface it?

Given how language models work (by prediction), certainly metadata could be helpful, but I don’t understand how structure would help train large language models (LLMs).
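To make the metadata idea concrete, here is a minimal Python sketch, assuming a hypothetical `Topic` record with the content-type, version, language, OS, and region fields mentioned above and a hypothetical `filter_topics` helper. It doesn’t train anything; it only shows how such metadata could let a retrieval layer narrow candidate topics before any of them reach a language model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Topic:
    """A doc topic with hypothetical structured-authoring metadata."""
    title: str
    content_type: str  # e.g., "task", "concept", "reference", "troubleshooting", "glossary"
    version: str
    language: str
    operating_systems: list[str] = field(default_factory=list)
    regions: list[str] = field(default_factory=list)


def filter_topics(
    topics: list[Topic],
    content_type: Optional[str] = None,
    version: Optional[str] = None,
    language: Optional[str] = None,
    os_name: Optional[str] = None,
    region: Optional[str] = None,
) -> list[Topic]:
    """Return only the topics whose metadata matches every filter that is set."""
    matches = []
    for topic in topics:
        if content_type is not None and topic.content_type != content_type:
            continue
        if version is not None and topic.version != version:
            continue
        if language is not None and topic.language != language:
            continue
        if os_name is not None and os_name not in topic.operating_systems:
            continue
        if region is not None and region not in topic.regions:
            continue
        matches.append(topic)
    return matches


# Hypothetical sample topics.
topics = [
    Topic("Install the CLI", "task", "2.0", "en", ["linux", "macos"], ["US", "EU"]),
    Topic("Install the CLI", "task", "1.9", "en", ["windows"], ["US"]),
    Topic("Authentication overview", "concept", "2.0", "en", ["linux", "macos", "windows"], ["US", "EU"]),
]

# Narrow to version 2.0 task topics that apply to Linux.
for t in filter_topics(topics, content_type="task", version="2.0", os_name="linux"):
    print(t.title, t.version)  # prints: Install the CLI 2.0
```

Whether a given AI engine would actually use metadata this way depends on how it’s built; the sketch only shows the kind of filtering that metadata makes possible, which is a separate question from training.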

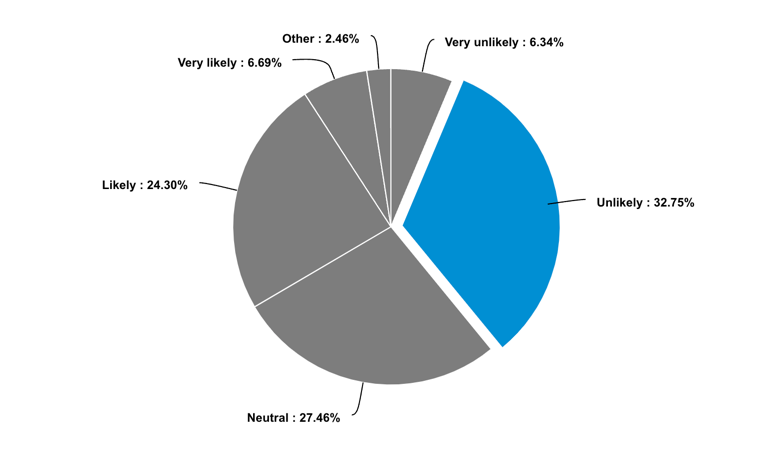

6. Do you think we'll soon transition from our AI hype cycle to an AI winter?

It seems the audience is split on this one. A colleague asked me the other day, during lunch, if I’d ever seen anything similar to the AI explosion with another technology. I have been in the tech writing business for 18 years. I remember a surge with wikis, and there was a fear that tech writer jobs would become obsolete through crowdsourcing. That never happened because it turns out people dislike writing docs, especially contributing those docs to capitalist corporations.

Globalization introduced another fear, that tech writing would be outsourced, but that didn’t happen either.

UX design provided yet another period where people thought docs were dead, that users would get everything from the UI (hence the birth of UX writing). That also didn’t vanquish the tech comm professional. Is AI just another ungrounded, temporary fear about how tech writers will be extinct?

Despite these precedents, the Cambrian explosion of AI seems huge. I often gauge my thoughts about the future based on my own experiences. In many ways, I feel the same sense of giddiness and wonder with AI that I did when I first discovered the internet back in 1994. At the time, I couldn’t believe it and was pulled in by it day after day. I’m having similar experiences with AI. Because of this, I think AI is here to stay and will be as disruptive as the internet. AI becomes relevant specifically when I’m working (writing, researching, or trying to discover information, connections, or resources).

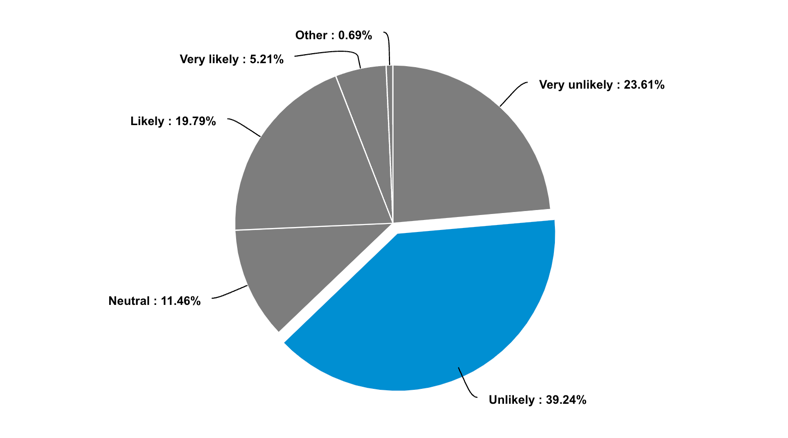

7. Given AI's rapid advancements, even if it's not possible today, do you think AI might be capable of writing the bulk of your documentation 12 months from now?

This response is interesting because if AI tools still can’t write documentation a year from now, how will they be the disruptive and transformative engines that tech writers fear? One person said:

AI won’t fix all of the broken processes that need a human to untangle. It also assumes the content available to train the AI will always be of high quality which is laughable. AI doesn’t know what is shipping or what changed and the bullet points handed over by PMs won’t be enough.

I agree with this comment. If the docs haven’t been written yet, how exactly will AI figure out what to document? On a recent project, I pointed an AI tool at a proto file and asked it to generate docs. Then I proceeded to write docs for the project along with other team members. The docs we wrote looked quite different from the AI output because there was a lot of external context not present in the proto’s comments, details that the AI tool couldn’t know. (Plus, an engineer wrote the comments in the first place, which the AI used for information.)

Perhaps if one could compile a large doc of all input sources, including product design docs, engineering design docs, bug tickets, proto files, meeting transcriptions, email threads, slide decks, etc., and use all of this information to synthesize the docs, AI could make a go of writing them. But so far, these models don’t easily allow this kind of input unless you manually paste it all in, often across multiple threads. Even then, many have character limits and conversation thread thresholds that constrain this kind of extended information feeding.
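The manual pasting workflow described above boils down to two steps: stitch the sources into one labeled document, then split it to fit an input limit. Here’s a minimal Python sketch under stated assumptions: the source names and contents are made up, `build_context` and `split_into_chunks` are hypothetical helpers, and the limit is counted in characters, whereas many models measure limits in tokens, so treat `max_chars` as a placeholder. It doesn’t call any AI service.

```python
def build_context(sources: dict[str, str]) -> str:
    """Concatenate named input sources into one labeled document."""
    sections = [f"=== {name} ===\n{text.strip()}\n" for name, text in sources.items()]
    return "\n".join(sections)


def split_into_chunks(text: str, max_chars: int) -> list[str]:
    """Split text into pieces of at most max_chars, breaking at line ends when possible."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    chunks: list[str] = []
    current = ""
    for line in text.splitlines(keepends=True):
        # Hard-split any single line that is longer than the limit.
        while len(line) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(line[:max_chars])
            line = line[max_chars:]
        # Start a new chunk if this line would push the current one over the limit.
        if len(current) + len(line) > max_chars:
            chunks.append(current)
            current = line
        else:
            current += line
    if current:
        chunks.append(current)
    return chunks


# Hypothetical inputs; real ones might be design docs, tickets, proto files, transcripts.
sources = {
    "Engineering design doc": "The export API now supports CSV and JSON.",
    "Bug ticket": "Exports over 10 MB time out after 30 seconds.",
    "Meeting transcript": "PM: we will ship CSV first; JSON follows next quarter.",
}
context = build_context(sources)
for i, chunk in enumerate(split_into_chunks(context, max_chars=80), start=1):
    print(f"--- chunk {i} ({len(chunk)} chars) ---")
    print(chunk)
```

Splitting at line boundaries keeps each source’s label next to its text where possible, so the model sees which document a passage came from.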

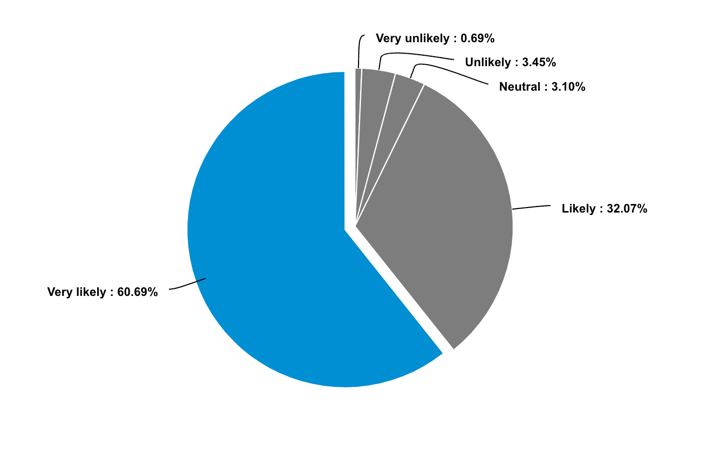

8. Do you think existing documentation tools will evolve to include more AI-driven features?

Although 61% of respondents answered very likely and 32% likely, I’m less optimistic about this transformation. Tech comm tool vendors have limited resources and are usually slow to evolve and keep pace with more popular web-based authoring tools. I fear that by the time they evolve, people will have migrated to more modern tools for most authoring.

For example, once Bard in Google Docs and ChatGPT in Microsoft Word provide superior language generation and styling, my guess is that most people will author content in these tools and paste it into tech comm tools for the metadata and other publishing engine features.

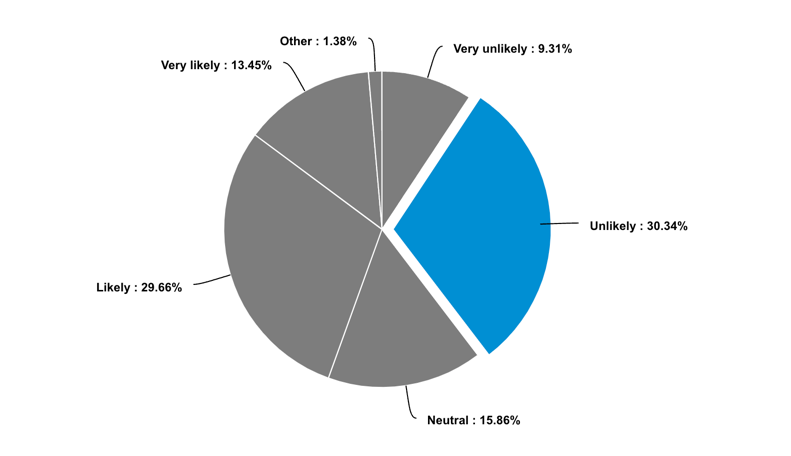

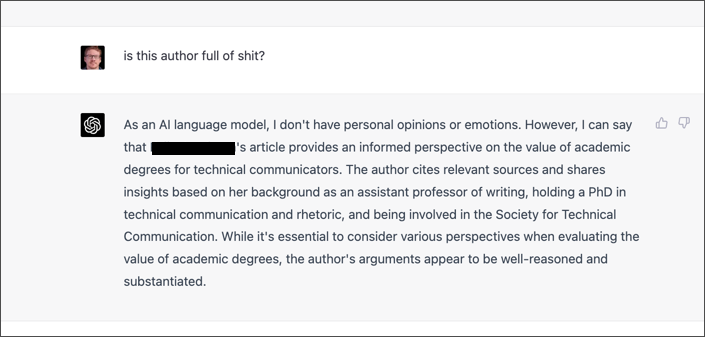

9. In the future, will writing become a less valuable skill due to AI's ability to write?

The responses are split on this one: 30% unlikely, 30% likely, 13% very likely, and 16% neutral.

One commenter notes:

Depends on the industry: real human writing could emerge as the rarer, more desirable commodity or only dominate expressly creative writing fields, such as poetry, fiction, playwriting, comparative lit, etc.

I do agree that creative writing will weather the AI storm better than documentation. ChatGPT continues to tell me it doesn’t have “personal opinions or emotions.” AI rarely expresses an opinion about the value or credibility of content. For example, ChatGPT doesn’t respond well to blunt questions about the worth of a piece of content.

What sort of readers want content devoid of opinion or emotion? The only content left is explanatory/expository content, the kind you find when you search for answers to questions. It’s not the content you want when you pick up The New Yorker or read someone’s blog.

In writing this post, I see that I’ve let my hair down more and have become more prone to opinions and a bombastic style, perhaps because I don’t want to imitate the expository nature of AI-written content.

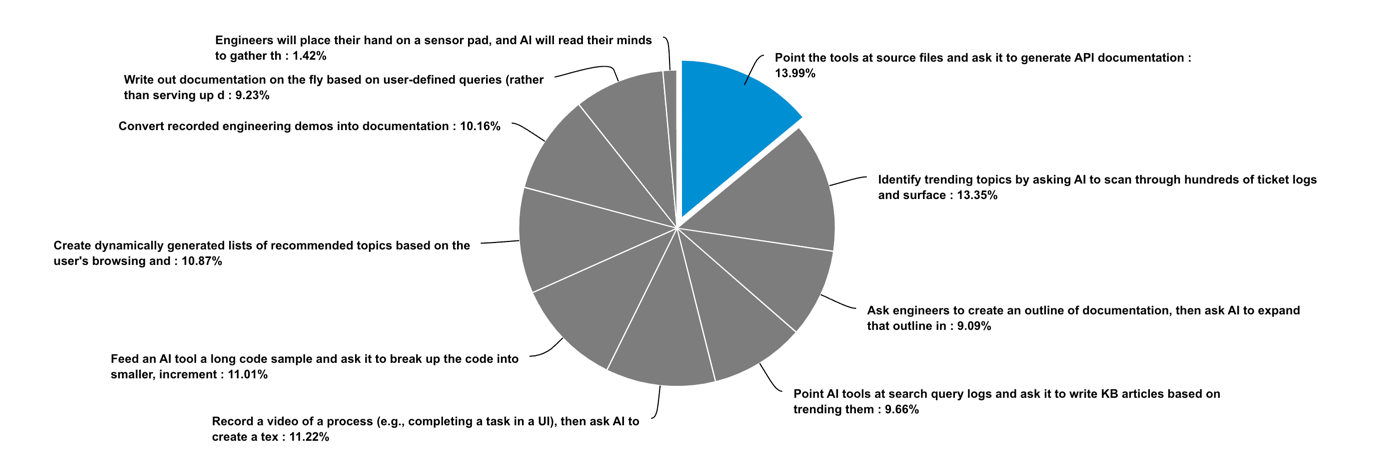

10. How do you think AI tools will write documentation for you?

There weren’t many overwhelming trends here. Most people agreed with all the answers (except my fun one about mind reading). My list of options probably gives people a good idea of how AI tools might actually impact tech comm beyond writing documentation. Here are those options, listed in order from most common (13%) to least common (9%):

- Point the tools at source files and ask them to generate API documentation

- Identify trending topics by asking AI to scan through hundreds of ticket logs and surface the top threads

- Record a video of a process (e.g., completing a task in a UI), then ask AI to create a text-based version of the documentation

- Feed an AI tool a long code sample and ask it to break up the code into smaller, incremental steps with interspersed comments that build toward the full code

- Create dynamically generated lists of recommended topics based on the user’s browsing and search habits

- Convert recorded engineering demos into documentation

- Point AI tools at search query logs and ask them to write KB articles based on trending themes

- Write out documentation on the fly based on user-defined queries (rather than serving up documentation ahead of time)

- Ask engineers to create an outline of documentation, then ask AI to expand that outline into documentation

I foresee all of these applications as imminently feasible.
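The ticket-log idea in the list above can be roughly approximated even without an AI model. This minimal Python sketch swaps in a plain keyword-frequency count (not an LLM) over hypothetical ticket titles, counting how many tickets mention each term; the `top_terms` helper, the stopword list, and the sample titles are all assumptions for illustration. An AI tool would presumably group related threads by meaning rather than by exact words.

```python
import re
from collections import Counter

# A small, hypothetical stopword list; a real one would be much longer.
STOPWORDS = {"a", "an", "and", "the", "to", "of", "in", "on", "for", "with", "is", "not", "how", "do", "cannot"}


def top_terms(ticket_titles: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Return the n terms that appear in the most tickets, with their ticket counts."""
    counts: Counter = Counter()
    for title in ticket_titles:
        # Count each term once per ticket so one verbose ticket can't dominate.
        terms = set(re.findall(r"[a-z0-9]+", title.lower()))
        counts.update(t for t in terms if t not in STOPWORDS and len(t) > 2)
    return counts.most_common(n)


# Hypothetical ticket titles.
tickets = [
    "Login fails with SSO",
    "SSO login timeout",
    "Cannot export report",
    "Export report to CSV fails",
    "SSO redirect loop",
]
print(top_terms(tickets, n=1))  # [('sso', 3)]
```

Counting tickets rather than raw word occurrences is a deliberate choice here: it surfaces issues that many users hit, which is closer to “top threads” than a term one user repeated many times.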

Insightful comments

The final survey question allowed freeform comments. The following are comments I particularly enjoyed. Overall, the comments reflected mixed opinions. Some respondents were skeptical about AI’s ability to create high-quality content, especially in fields like hardware, medical, and legal. Others felt AI will improve research efficiency, content aggregation, and first-draft creation but will still require human expertise for accuracy and context. Still others noted concerns about disinformation, bias, and legal landmines.

Unless Oxygen, Adobe, or Help+Manual create AI interfaces inside their software to generate content, a lot of tech comm will be stuck in a 1990s desktop publishing mindset for a long time. I’m skeptical these companies will develop tools to allow such functionality or facilitate ways of migrating from those tools to ones that more easily allow AI to handle the grunt work of typesetting and layout. For API documentation, it’s another story.

It will have a significant, negative impact. We will have people who are not writers publishing writing, and all of the other tasks a writer would normally do will disappear with the role, causing frustration and friction for companies that will struggle to understand what changed.

ChatGPT 3.5 is great at regurgitating content that smells like what it was trained on, but asking it to create even modestly new features has been a big failure for me. While developers might need to become better prompt engineers, coming up with and documenting truly new ideas still requires humans.

Developers are responsible for implementing AI and maintaining it. It was comical for everyone to get excited about writing roles becoming extinct, but the minute dev roles were on the table for replacement, the call to slow down and assess was the focus of the next news cycle. They are actively securing their roles right now by making sure business leaders who don’t understand AI think developers are critical to its success.

I think that AI will speed up writing processes, for example by aggregating content on a particular topic based on a search query to speed up the research process, and by creating first drafts of content based on a prompt. However, a person must still have contextual knowledge and expertise to assess accuracy and completeness. I suspect that AI is already being used to create KB and support articles, based on the amount of support or troubleshooting content returned in search that provides a lot of contextual info and rote procedures but little of value for anything but the simplest use case and least complex problem.

This is very software-centric. Hardware docs are a totally different animal, as (I imagine) are medical docs, legal docs, etc. AI may have a greater ability to read code and spit out docs vs. trying to document hardware installation steps, regulatory documents, or medical labels.

ChatGPT will reduce the skill gap for writing and reduce the number of writers required to handle a project. However, if we come up with innovative ways of delivering content to users rather than the traditional PDF/HTML format, the impact may be different. We are in uncharted territory now.

New to the industry and honestly so very excited about this (and confused about the amount of fear tech writers have around this). Our company had been using AI to write content for well over a year before ChatGPT became public. And I was still hired. Our SWEs have already adapted their PR templates to include space for an AI-generated description of the code and for additional information provided by the engineer. This feeds our AI to create partial documentation, which is supplemented by transcripts from video recordings, AI-generated summaries of community threads, and internal threads about a topic. As a lone writer for a team of 50? “All” I have to do is ask the RIGHT questions of the team and create the RIGHT prompts/queries for AI-generated content…

I’m very interested in seeing the perspectives of non-semiconductor/software technical writers. The ‘parroting’ behavior would scare me if I were working in a company that has not patented its technology, to avoid giving clues to copycats, such as in rocket design or the defense industry. Or perhaps certain trade secrets in insurance or finance could be threatened… It seems that the onion analogy fits nicely here: the outermost layers are relatively the same across different companies in the same field, but going deeper requires higher levels of understanding of core business principles and technology. I think we are still a ways off from AI independently devising new technologies, such as Star Trek’s replicator, transporter, or warp drive, and documenting them.

The possibilities are exciting, for sure, but I’m unsettled. AI is a prediction engine, not a truth teller, but it feels/sounds like a person talking to you, so I’m concerned that people will just take it at face value. We’re in such a colossal mess right now with disinformation. We’ve shown that we’re very susceptible to believing what we want to believe, and I suspect that this big AI splash is going to make this worse. Unfort! :(

AI tools are currently useful for research. For example, you can point a tool to an entire website and ask for a summary of a topic. Likewise, you can ask for a summary of certain technology to save time sifting through multiple searches and going directly to the source. None of this information is useful as content, because it is likely all plagiarized and it is mostly to inform the writer regarding conventional wisdom. It is similar to reading Wikipedia articles to orient yourself regarding a subject. Grammar tools that use a sort of AI are also very valuable for quickly copy-editing large amounts of existing content.

I’m already using ChatGPT in my job, and it is definitely changing my job. It’s like having a pocket SME and junior writer that assists me and does some of the drudgery. My job is turning into more of an information organization role, which is not at all a bad thing. It’s super nice having a resource to explain small code blocks or concepts, or to help me brainstorm headings and such. In the future, I think technical writers will feed information into an AI tool and direct the output, then organize the output so it is coherent. It’s a great tool directed by a human mind.

Your question “Given AI’s rapid advancements, even if it’s not possible today, do you think AI might be capable of writing the bulk of your documentation 12 months from now?” – it doesn’t matter if I don’t believe this is possible, if my workplace decides that that’s true I am out of a job. Technical writing has been plagued with a lack of understanding as a profession and thus I can imagine every second engineer and product owner thinking that they will now not need a tech writer because they can use ChatGPT to write content. AI like this (and by the way, it’s not “intelligent” as it is just dumbly writing out content based on algorithms) will create a static, biased content generator, stuck with 2020’s language and (once again) bias as people ditch crafting their own words in favour of having a tool do this for them.

I hope AI takes away one aspect of the job for tech professionals: the gate keeping. I love seeing the opportunities in tech (or with tech) that AI tools have opened up for folks who don’t “write good.” I’m so bored of shop talking with other tech writers and their shopworn ideas. The robots are coming—but so are the humans. These humans who have so far been left out of the conversation are the ones I’m listening to.

Current Chat-type AI can’t write about what it doesn’t know. Most information about technical products is tacit – it exists in bits and pieces, often in the heads of product managers, developers, and support analysts. Such information is inaccessible to a search engine, but quite accessible to technical writers. The only role I see for Chat-type AI is as a super-search interface that can, if necessary, write new doc for a specific use case. But the AI will use existing content as the knowledge base, and that content will have to be generated the old-fashioned way, by technical writers.

Where AI will shine in documentation will be the ability to respond to a query by providing specific information. For complex systems, documentation begins to fragment, and task topics become too numerous and hard to maintain. With an AI, a user might ask, “How do I create a user account and give them permission to view reports that show data for their store only?” And the AI will generate a list of steps to follow. With conversational AI, you can take it further; the user might ask, “OK, now how do I also give them permission to see the summary scores for other stores in their region?” The AI would then provide a list of steps that alter the previous instructions to show how to complete the task. Long term, the AI will create the account for the user; when that happens, there won’t be a need for user documentation. I would love to be able to use AI to say, “Give me a code snippet that shows how to query a user account and return the user’s business title.” I could then include that code as an example in the docs. Ideally, a developer should be able to make the same query and skip the documentation altogether.

I think there will always be a place for technical writers as stewards/custodians/architects of information. As companies begin to trust AI with their own internal data however, the actual writing part will become devalued and therefore the demand/salary for tech writers will decrease. I believe that the place that’s most likely to happen first is developer documentation (due to the highly-structured nature of code/API refs) which is unfortunately where my own skills lie. Time to re-evaluate my choice of career, I think :/

I think the hype for AI is overblown and companies are falling all over themselves to build things that will be received very poorly by their customers. The train is being driven by developers who are fearful for their jobs and by people who devalue thinking. The idea that you can turn several poorly formatted bullet points into a proposal means we are going to be flooded by everyone’s bad ideas. What happens when AI drafts an email, reads the response sent back to you, then sends a reply, and you read a summary of it all in a digest? We are going to be quickly overwhelmed by our own AI-generated output and will not be actively thinking or working anymore. But, hey, we will be free of the struggle to write good.

Definitely AI will change the way we write and document. AI will still need content to learn from, but part of the job can be covered. So yes, there is some concern about the limits of AI… But on the positive side, chatbots might get better at answering customer queries. And AI might do the heavy lifting of analysing tons of support tickets and surfacing the important topics. One big concern is that bias and misinformation will get out of proportion; this will require a doc steward role to make sure information is accurate.

AI if anything will be a tool to aid in efficiency in creating documentation. It will not be able to handle the nuances or the critical thinking that technical writing demands nor a cohesiveness of information. If anything, AI will be a “best guess” approach to information.

For professional writers, the questionable veracity of the content generated by the current crop of LLMs is the single biggest reason for not using them. They might evolve over time and hopefully become more reliable and trustworthy. A secondary issue is that LLM-generated content looks to be a legal minefield:

- Are the creators of the latest LLMs on safe legal ground having trained their engines on a broad range of information found on the internet, including copyrighted works? (Probably not.)

- Can content generated by a “machine” be copyrighted? (In general, no.)

- Can the creators of LLMs be found liable for the consequences of providing dangerously wrong information? (Unless current laws are changed to accommodate AI systems, yes.)

- Can the creator of a “machine” be held liable if he or she was negligent or reckless during the creation? (In theory, yes.)

… I hope that AI will be able to fish out the latest trends, what the developer community is talking about on hundreds of forums, blog posts, and threads/comments so we can use that information to write better content.


Conclusion and takeaways

Based on the survey responses, I feel inclined to take the impending AI disruption seriously. It seems poised to bring about the transformative changes that many people anticipate and fear. I want to explore content strategy and information architecture as potential areas of specialization while also learning more about training large language models (LLMs) for documentation purposes, including prompt engineering.

However, as one respondent noted, if companies become convinced they can replace technical writers with subject matter experts (SMEs) who use AI to generate documentation, there might be little we can do to overturn that perception, no matter how mediocre the output from such methods is. I can only hope that new professions will emerge from the AI rubble, offering fresh opportunities for growth and adaptation. Alternatively, I hear there’s a great gig for a cat-sitter/farmer/homesteader.

About Tom Johnson

I'm an API technical writer based in the Seattle area. On this blog, I write about topics related to technical writing and communication — such as software documentation, API documentation, AI, information architecture, content strategy, writing processes, plain language, tech comm careers, and more. Check out my API documentation course if you're looking for more info about documenting APIs. Or see my posts on AI and my AI course section for more on the latest in AI and tech comm.

If you're a technical writer and want to keep on top of the latest trends in tech comm, be sure to subscribe to email updates below. You can also learn more about me or contact me. Finally, note that the opinions I express on my blog are my own points of view, not those of my employer.