This is a recording of our AI Book Club session discussing Kai-Fu Lee's AI Superpowers: China, Silicon Valley, and the New World Order. There are 4 people in this book club discussion, and our conversation focuses on the emerging AI duopoly between the US and China. We share our own US-centric blind spots and weigh the political and cultural implications of China potentially winning the AI race. We also talk about Kai-Fu Lee's prediction of mass job displacement and his proposed social investment stipend, questioning both its feasibility and its potential drawbacks. The discussion also explores how our own professional roles, particularly in tech comm, might evolve to manage and direct AI rather than be replaced by it, and how surprisingly relevant the book feels today despite being published in 2018.

In the pursuit of bug zero, it helps to identify different types of bugs and the right strategies for dealing with each of them. In this post, I explore four common types of complex bugs I've encountered: Russian-doll bugs, scope-creep bugs, non-actionable bugs, and wrong-owner bugs.

I recently appeared on the Coffee and Content episode, hosted by Scott Abel, with another guest, Fabrizio Ferri-Benedetti, who writes a blog at Passo (passo.uno). The episode theme comes from a post Fabrizio wrote titled What's wrong with AI-generated docs, but the episode didn't focus exclusively on AI's problems and gotchas so much as AI strategies with documentation in general. This post provides a recording and transcript of the episode.

This post is my review of Parmy Olson's Supremacy: AI, ChatGPT, and the Race That Will Change the World. Among many possible AI topics, I focus on the sellout aspect of the book. I compare the AI entrepreneurs who sell out to big tech to fund their massive compute needs with the creative writers who sell out to tech companies in exchange for liveable salaries.

The following is a guest post by David Kowalsky continuing his exploration of productivity and time management topics. This post covers his experiences with Cal Newport's Slow Productivity and Oliver Burkeman's Meditations for Mortals, along with practical experiments and advice for technical writers. Kowalsky considers how these philosophies can help technical writers find more sustainable, meaningful approaches to their work.

In this podcast, I chat with Fabrice Lacroix, founder of Fluid Topics, about the evolution of technical communication. Fabrice describes the industry's progression from (1) delivering static, monolithic PDFs to (2) using Content Delivery Platforms (CDPs) that provide dynamic, topic-based information directly to users to (3) developing content not just for human consumption, but for AI agents that will use this knowledge to automate complex tasks and workflows.

Technical writers' attitudes toward AI can be all over the map, from enthusiastic early adoption to cautiously optimistic to complete rejection. In this post, I try to unpack the reasons that lead some writers to believe what they do about AI. Using research from several articles, I look at AI's jagged frontier, the impact of domain expertise, and interaction modes as ways of understanding the influences that lead to different attitudes.

This is a recording of our AI Book Club session discussing Ray Kurzweil's The Singularity is Nearer: When We Merge With AI. You can watch the recording on YouTube, listen to the audio file, read some summary notes, browse discussion questions, and even listen to a NotebookLM podcast (based on the summary). There are 5 people in this book club discussion, and we focus a lot on the topic of acceleration, especially as we see it happening in the workplace. We also weigh in on Kurzweil's techno-utopianism and how persuaded we are by the arguments about AGI landing in 2029, the likelihood of machine and biology merging (through nanobots), and more.

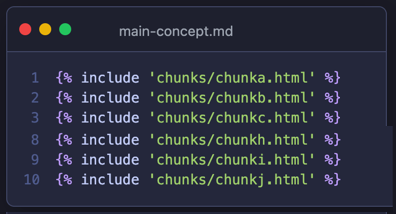

When used for fact checking, AI tools might do better with a complete, self-contained set of documentation to check against a reference. Single-sourcing, with its conditional and fragmented content, complicates this model.

In this experiment, I try to implement a loop of recursive self-improvement on an essay, but it fails. I gave Gemini a prompt to continuously improve a blog post through 10 iterations, hoping to see exponential quality gains. Instead, the AI's editorial judgment deteriorated over time, resulting in awkward, pretentious writing that was worse than the original.

I'm starting a new series describing the various AI experiments I do. I've been looking for my next area of focus, and I realized that more than anything else, I like experimenting with new tools, techniques, ideas, etc. So I'm writing a series of posts called AI experiments.

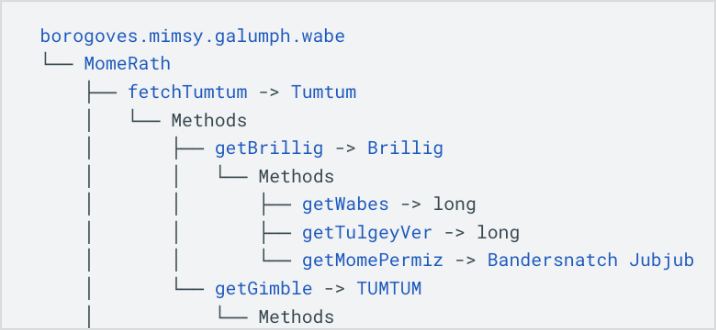

In my prompt engineering series, I added an article exploring how API quick reference guides (QRGs) can improve developer usability and also augment AI chat sessions with much-needed context. These QRGs are structured as hierarchical tree diagrams providing a visual map to complex APIs. The diagrams make it easier for developers to navigate and understand relationships between elements compared to traditional flat reference documentation. The article also includes a step-by-step process for creating these QRGs using AI.

This post describes the key arguments and themes in The Coming Wave: AI, Power, and Our Future, by Mustafa Suleyman, for the AI Book Club: A Human in the Loop. This post not only breaks down the logic but also jumps off into some themes (beyond the book) that might be more tech-writer relevant, such as potential future job titles, areas of focus for tech writers to thrive now, questions for discussion, and more. It also contains the book club recording.

This post captures some of my reflections on attending the 2025 Write the Docs conference in Portland. Some themes I discuss include the paradox of AI fatigue, the delight and difficulty of unconference sessions, why lightning talk formats are so challenging, and more.

In this Q&A with Fabrice Lacroix, founder of Fluid Topics, I ask him questions about his recent tcworld article in which he argues for an innovative, advanced model for Enterprise Knowledge Platforms (EKPs) acting as a central AI-powered brain for all company content, delivered via APIs. Fabrice also outlines a future where tech writers become information architects, governing vast knowledge ecosystems and coaching diverse content contributors.