12 predictions for tech comm in 2026

- 1. AI hype levels off

- 2. The virtuous cycle starts to become apparent

- 3. Hiring will shift from headcount to high-value skills

- 4. More tech products everywhere

- 5. No economy crash

- 6. More AI-powered docs

- 7. NotebookLM as a RAG backend for docs

- 8. OpenAI falls further behind

- 9. Tech writers embrace automation engineering

- 10. China continues to accelerate their AI

- 11. P(doom) talk shifts from AGI subjugation to bad human actors creating WMDs

- 12. Defense becomes AI heavy

- Conclusion

- Related posts

- Works cited

Note: Some content here is AI-assisted.

See also a podcast where I discuss these predictions and others with Fabrizio Ferri-Benedetti: Podcast: Tech comm predictions for 2026 (Phase One)

1. AI hype levels off

In 2026, the hype around AI will level off and a more practical view will take hold. Productivity gains from AI aren’t on an exponential curve. The velocity/productivity boost from AI has a ceiling, and people will start to recognize this. Instead of thinking that tech writers can be entirely replaced by AI, companies will adopt a more realistic view that AI, in the hands of a skilled tech writer, can augment their output by a factor of 1.5 or so at most.

When I look at my own changelist stats for the year, I think I see about a 10% increase from the past year (when I was also heavily using AI), but nothing like the massive bump from my pre-AI time. As imperfect as my changelist stats are (version control is easily distorted by site migrations or new API docs), it’s pretty clear that the productivity bump occurred over the last two years (when AI hit the scene), and now things are leveling out. It’s not an unlimited growth curve, as the validation bottlenecks and other manual process overhead limit velocity.

Many tech writers are just getting started on their AI journeys. As they gain familiarity with AI tools (like Antigravity, Gemini 3, or Claude Code), they’ll discover this initial boost. Reports like the one from Index.dev provide some quantitative data around the productivity boost, noting that “developers report that AI tools raise productivity by 10 to 30% on average” (Developer Productivity Statistics with AI Tools 2025). This aligns (at least conservatively) with my own qualitative experience, though it seems low.

The report adds an interesting nuance to this picture, noting that “When tested, the same developers actually took 19% longer to finish their tasks with AI,” even though “developers still believed they worked 20% faster with AI.” Furthermore, “46% of developers say they don’t fully trust AI outputs.” This paradox suggests that while the perception of velocity is high, the actual output may lag due to the overhead of reviewing and fixing AI-generated content.

Because of the distrust of AI outputs, I see more emphasis on review and validation tools, since this is where the bottleneck is. Writers need more confidence that AI outputs are correct, and tools/techniques that can help with this will be in high demand.

2. The virtuous cycle starts to become apparent

In 2026, we’ll start to see the effects of the virtuous cycle of AI adoption. Higher quality docs lead to better code, which leads to more robust tools, which in turn leads to better products. This cycle is powered by usage data: as people use AI-infused products, that data feeds back into the models to improve them, which drives further usage. As Erik Trautman notes, this “ever-growing data set” allows the product to pull ahead at an increasing rate (The Virtuous Cycle of AI Products).

Those companies embracing AI will move faster and do more than those that don’t. It won’t be a rapid trajectory, just a gradual rising of the company’s overall economic ocean and flourishing in slow, steady, impossible-to-dismiss ways. But AI-based companies will start to move faster in a noticeable way.

I’m inclined to believe in the virtuous cycle because I see its impact on my docs. The more accurate my docs are, the easier it is to add new docs and make other updates. Using AI, I’ve made comprehensive API diagrams and other attribute tables that make it easier to understand and review each additional update.

3. Hiring will shift from headcount to high-value skills

In 2026, hiring numbers will likely remain flat for generalist roles, and many tech writers who leave a team might not have their positions backfilled. The market for technical communication will continue to be challenging for those relying solely on traditional writing skills. Companies are under increased pressure to fund the “AI arms race”—buying expensive compute and infrastructure—and to offset those costs, they’re becoming far more selective with headcount.

This trend not only aligns with what I’ve seen in my own team’s dynamic (it’s been years since we expanded our team with an additional person), but also with the “Hiring less, expecting more” trend identified in PwC’s 2025 AI Jobs Barometer. PwC found that while the number of people working in the Information, Communication, and Technology (ICT) sector has nearly doubled since 2012, the share of job postings for these roles has dropped by nearly half. This signals a major shift: companies aren’t flooding job boards to fill seats; they’re hunting for a smaller number of specialized experts.

Data from the World Bank’s Digital Progress and Trends Report 2025 confirms this decoupling. While the broader tech hiring market has softened, demand for specific AI skills has gone up. The report notes that online vacancies requiring Generative AI skills rose ninefold between 2021 and 2024. Further, more than 70% of these high-value AI job postings are located in high-income countries; this signals a consolidation of talent rather than a broad, global expansion.

The takeaway for technical writers is that job security might now hinge on specialization. Generalist roles are fading, but specialized, AI-literate roles are commanding a premium. The market isn’t hiring for more people; it’s hiring for more AI specialists. If you’re looking for a job as a technical writer, specialize in AI and doors will open.

4. More tech products everywhere

In 2026, we’ll see significantly more technology products released. The fundamental nature of technology is that tech begets tech. What are most people doing with their AI tools? They’re writing more code, launching more tools and scripts and apps and frameworks. AI leads to more tech tools, which means more engineers building more tech-based products faster and more prolifically. The pace of change will continue to accelerate, with technology products saturating the economy.

I’ve seen this trend in the Cambrian-like proliferation of tools in my workplace. There’s a palpable fatigue whenever someone announces yet another tool. Recently I was in an AI-focused tech writer meeting where someone was heralding a new AI tool, and a person raised their hand and said (more or less), “Is this yet another tool to learn?”

This technology saturation isn’t just about more apps; it’s about AI becoming invisible infrastructure at every point in the code/content development journey. As Help Net Security says in a report titled Five identity-driven shifts reshaping enterprise security in 2026, “AI is now embedded at every layer of the organization, from workflows and applications to customer experience, DevOps, IT automation, and strategic decision making.”

I see this daily: when I read a bug, AI is there to summarize it. When I create a new CL, AI is there to read my changes and type a description. When I review code, I can prompt AI to look for errors in the changed files. When I browse the code base, AI is there to interpret it. When I write my weekly report, AI is there to help collect all my week’s highlights. When I go to bed at night, AI is there to tuck me in. The AI infrastructure isn’t consolidated in a few apps; it’s spread across the entire codebase, creating a dense, interconnected web of automated agents and tools ready to assist us seemingly everywhere.

5. No economy crash

In 2026, despite widespread fears, the U.S. economy will not crash. The economy will cool off, but I don’t think we’ll see a crash of tech stocks because of the previous point: there’s a Cambrian explosion of technology. This influx of general-purpose builder tools will accelerate and advance technology across domains, from healthcare to library science to defense.

This influx of technological development across domains is what will keep the economy from crashing. The other domains will absorb AI-generated technologies like a sponge on a watery counter. Tech will be seen as increasingly essential for flourishing in any field and domain you’re in.

Research from Vanguard supports this, noting that AI is poised to become a “transformative economic force” similar to electricity, railroads, and the internet. Their 2026 outlook describes a cycle of “capital deepening”—where the economy swaps old tools for new ones—that is still in its early stages (Vanguard Economic and Market Outlook 2026).

However, Vanguard also warns that “muted expected returns for the technology sector are entirely consistent with… an AI-led U.S. economic boom.” Why? Because of “creative destruction from new entrants.” While the industry of AI will drive the economy forward, today’s tech giants might face fierce competition from agile startups using the very tools they helped create. So, no crash for the economy, but perhaps a reshuffling of the winners and losers in the stock market.

Data from PwC confirms this cross-domain diffusion, showing that outside of the tech sector, Professional Services and Financial Services now have the highest demand for AI skills (AI Jobs Barometer - PwC).

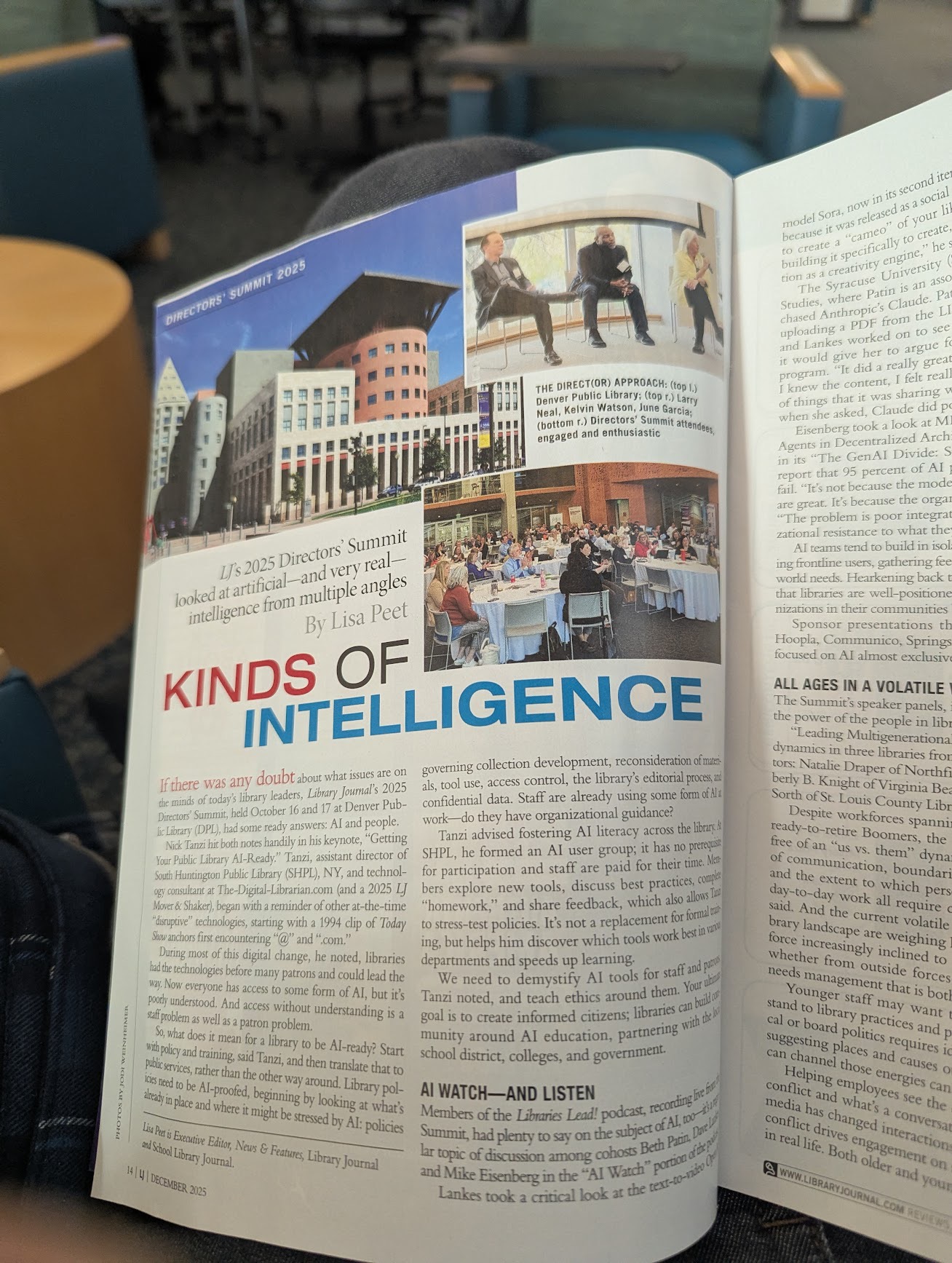

I’m already seeing AI find its way into non-tech domains in everyday life. For example, I was browsing periodicals in my public library the other day and picked up Library Journal (Dec 2025). Immediately I saw an article titled “Kinds of intelligence” focusing on how to make libraries AI-ready.

This isn’t just a random, one-off article; according to a report from Clarivate, “67% of global libraries are now exploring or implementing AI, up from 63% in 2024,” with those integrating AI literacy into formal training moving beyond just evaluation (Clarivate Report: 67% of Libraries Are Adopting AI).

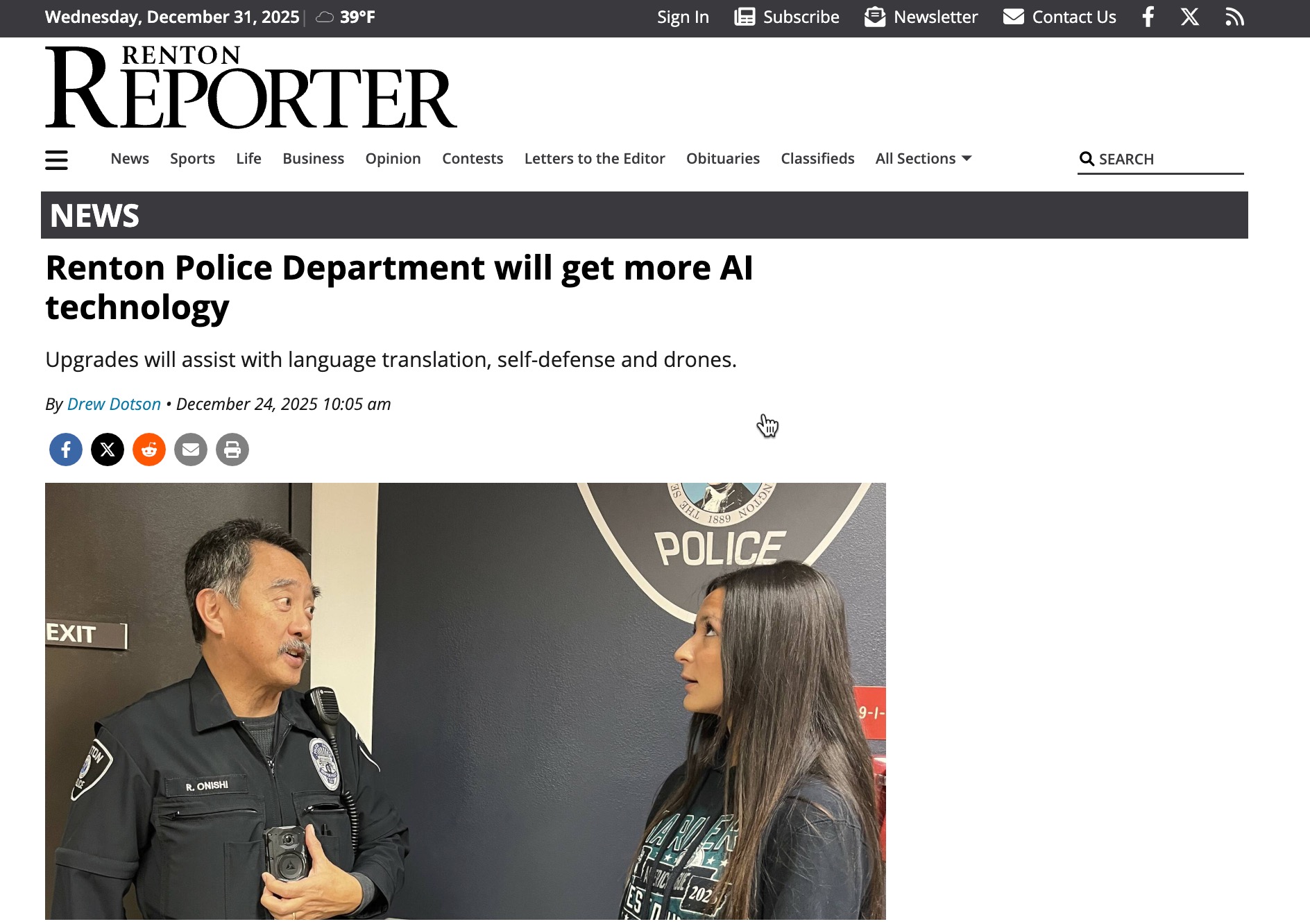

The same day I was in Boon Boona Coffee Shop and picked up the Renton Reporter while waiting in line for coffee. The front page had a story about police officers incorporating AI that will let them translate languages to better interact with diverse community members. The article explained, “At the Dec. 8 council meeting, RPD Deputy Chief Ryan Rutledge touted the new software to body-worn cameras that would enable real-time translation and transcribing capabilities to all officers” (Renton Police Department will get more AI technology).

Many of these domains that have never integrated AI will experience immediate wins and boons, similar to the boost I found writing tech docs. More people across domains will expand their imaginations for how to use AI in ways they previously didn’t consider. Again, this expansion of AI into so many new domains leads me to think the economy won’t crash, even though Andrew Ross Sorkin’s book 1929 is #2 on the New York Times nonfiction bestseller list.

6. More AI-powered docs

In 2026, there will be less emphasis on producing beautiful doc sites and more on generating accurate docs and feeding the content into AI-powered sites. This assumption is backed by both personal experience and some internal UX studies, which suggest that most readers frequently interact with AI tools for their information and coding needs.

The shift reflects how I personally use docs now: I actually don’t want to read the docs. I want to copy the docs into an AI tool so that the AI tool can read them and do what I’m trying to do, such as create a complex table filter or call a service.

Users will come to expect documentation sites to offer AI features and conversational capabilities. Interacting with AI will be the norm, and if your doc site doesn’t offer this feature, users will complain. The more users complain, the more writers will invest in AI features for their sites.

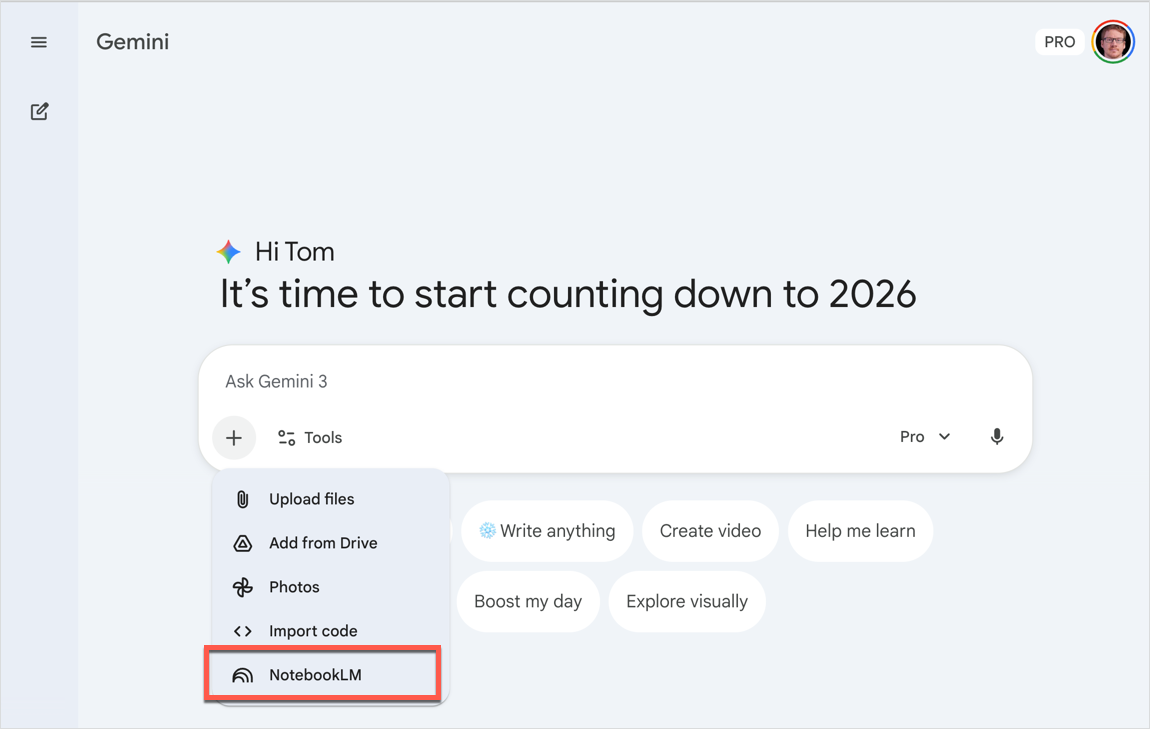

7. NotebookLM as a RAG backend for docs

In 2026, technical writers will increasingly use tools like NotebookLM as the RAG backend for their documentation. This prediction is a bit more far-fetched, but still one that I think might have merit. Developing an MCP server for docs serves only a developer audience, and creating your own custom AI chatbot implementation can be expensive, especially if you buy it from a third party. For many help sites, especially smaller ones such as those at startups, or even for ACL’d docs, the authors will resort to easy tools like NotebookLM for an AI-powered documentation experience.

This NotebookLM implementation is actually something I plan to do. Because all my docs are access-controlled, I can’t add them to an MCP server as with public docs. I’m wondering if NotebookLM might provide an easy solution to expose them to users with an AI interface.

After using a few scripts to extract and consolidate my content (as PDFs), I can upload my content into NotebookLM and then expose that Notebook chat to users via an iframe. Additionally, Gemini can use NotebookLM as a data source.
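As a rough idea of what an extract-and-consolidate script could look like, here is a minimal sketch. It's not my actual script: it assumes the docs live as Markdown files in a local folder and that the upload target accepts a single plain-text file, whereas my own workflow produces PDFs. The function name `consolidate_docs` and the `# Source:` label format are made up for illustration.

```python
from pathlib import Path


def consolidate_docs(source_dir: str, output_file: str) -> int:
    """Merge every Markdown file under source_dir into one text file.

    Returns the number of files merged.
    """
    root = Path(source_dir)
    out_path = Path(output_file).resolve()
    # Sort so the output order is stable between runs, and skip the
    # output file itself in case it lives inside source_dir.
    pages = sorted(p for p in root.rglob("*.md") if p.resolve() != out_path)

    sections = []
    for page in pages:
        body = page.read_text(encoding="utf-8").strip()
        # Label each section with its relative path (an assumed convention)
        # so an answer can be traced back to the page it came from.
        label = page.relative_to(root).as_posix()
        sections.append(f"# Source: {label}\n\n{body}\n")

    out_path.write_text("\n---\n\n".join(sections), encoding="utf-8")
    return len(pages)
```

Calling something like `consolidate_docs("docs", "combined.txt")` would leave one file to upload manually. Keeping the source path above each section is a small design choice that makes it easier to check an AI answer against the original page.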

NotebookLM is a tool with a lot of momentum and features. I expect its number of applications to grow. However, users already want to go to large LLMs (Claude, Gemini, ChatGPT) for one-stop information shopping, and these interfaces are too good to switch for a barebones/slow-loading implementation on a custom-built tech doc site. Plus, companies will spend their money on chips, not on UI redesigns/overhauls for doc sites.

8. OpenAI falls further behind

In 2026, OpenAI will continue to lose ground, squeezed by Google in the broader ecosystem and by focused competitors like Claude Code in the enterprise. It seems almost every tech professional I talk to outside my work uses Claude Code instead of ChatGPT. As a Googler I’m deep in the Gemini ecosystem and love it. I admit my bias here, as Gemini is my daily driver at work, but when you start providing an ecosystem or infrastructure of tools working together, it’s hard not to embrace Gemini.

To give an example of the ecosystem’s advantages, the other day I wanted a new task management app to replace Todoist. Upon realizing that I could interact with Google Tasks and Google Keep through Gemini (because they’re workspace apps), including implementing a Productivity Planner gem for workflows that draw upon the data, I abandoned any exploration into purchasing Notion or TickTick. It was a no-brainer.

The data supports the shift. While Google’s integrated ecosystem is driving growth in the broader market, with Gemini’s monthly users nearly doubling to 650 million by October (AI Market Share - ChatGPT’s Dominance Is Crumbling), OpenAI is facing a threat in the high-value B2B sector. The Xpert.Digital report notes that “in the enterprise segment, Claude has overtaken OpenAI with 32 percent, which has fallen back to 25 percent.” While Google has an advantage in breadth and integration, Claude is popular for its more specialized enterprise utility. OpenAI is caught in the middle.

The business models among AI companies are also different. OpenAI has burned through billions on research and infrastructure, with reports suggesting it “has committed over $1.4 trillion in infrastructure obligations” over the next eight years (How 2025 is the year Google made up for its ‘2024 AI mistake’). OpenAI relies heavily on venture capital funding to survive. In contrast, Google’s AI operations are fueled by the profits from its core businesses—YouTube, Search, and Ads. This financial stability allows Google to play the long game.

Meanwhile, in conversations I have with many non-tech people, it seems that OpenAI risks pulling a bait-and-switch: promising high-minded goals but delivering entertainment instead. Vanessa Wingårdh notes in her YouTube video AI Was Supposed to Cure Cancer - We Got This Instead: “Sam Altman claims he needs $7 trillion to cure cancer. Instead, OpenAI launched Sora - an AI TikTok clone generating fake humans doing fake things while everyday people pay for it through higher electricity bills.”

Despite Gemini’s upward trajectory and Claude’s enterprise win, ChatGPT still benefits from a massive first-mover advantage. Regional data shows that ChatGPT maintains “dominant positions between 78 and 83 percent” in major markets like the US, Germany, and Japan (AI Market Share - ChatGPT’s Dominance Is Crumbling). Even in China, where access is restricted, ChatGPT holds a commanding lead of around 79%. So while Google is gaining ground globally and Claude is winning the enterprise, OpenAI remains the default for most of the world.

9. Tech writers embrace automation engineering

In 2026, tech writers will embrace “automation engineering” and develop more scripts to automate their workflows. If 2024 was about prompt engineering, and 2025 was about agentic workflows, I think 2026 could be about automation engineering. As a tech writer, I’m focusing on ways to strategically break down my tasks into chunks and then orchestrate those chunks into deterministic scripts, if possible. For those tasks that can’t be scripted deterministically, I’m orchestrating them using a series of prompts, as much as possible imitating a script-like workflow.

Lately I’ve been fantasizing about becoming an “automation engineer” with docs, but to be honest, I might be overstating how many repeatable processes I actually have outside of release notes. In writing many documentation updates with AI, I incorporate a tremendous amount of back-and-forth—a kind of “cyborg” workflow where I guide the AI, it generates text, I correct it, and we iterate. This kind of iteration would be hard to script.

But even if a process isn’t fully automated, shifting my mindset toward engineering that interaction is key. If I can cut in half the time I spent writing release notes (and making doc updates based on those new features), I can free up time to knock out all my other bugs and get down to bug zero, which is a goal I had in 2025 but never reached.

This shift toward automation isn’t just a personal preference; it reflects a broader economic reality. A 2025 McKinsey report titled AI: Work partnerships between people, agents, and robots highlights that “currently demonstrated technologies could, in theory, automate activities accounting for about 57 percent of US work hours today.” This figure suggests that the potential for automation in our roles is far higher than we might assume.
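To make the split between deterministic scripts and prompt-driven steps more concrete, here is a small sketch for the release notes case. It's illustrative only: the `Change` record, the `kind` labels, and the prompt wording are all hypothetical, and the AI step is reduced to building a prompt string rather than calling any particular tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Change:
    kind: str      # hypothetical labels: "feature", "fix", "deprecation"
    summary: str


# A fixed section order keeps the output identical from run to run.
# Kinds not listed here are ignored in this sketch.
SECTION_TITLES = {
    "feature": "New features",
    "fix": "Bug fixes",
    "deprecation": "Deprecations",
}


def draft_release_notes(version: str, changes: list[Change]) -> str:
    """Deterministic step: group changes by kind and render a Markdown draft."""
    grouped = defaultdict(list)
    for change in changes:
        grouped[change.kind].append(change.summary)

    lines = [f"## Release {version}", ""]
    for kind, title in SECTION_TITLES.items():
        if grouped[kind]:
            lines.append(f"### {title}")
            lines.extend(f"- {summary}" for summary in sorted(grouped[kind]))
            lines.append("")
    return "\n".join(lines).rstrip() + "\n"


def build_review_prompt(draft: str) -> str:
    """Prompt step: package the draft for an AI tool to polish."""
    return (
        "Rewrite these release notes for clarity. "
        "Do not add or remove items.\n\n" + draft
    )
```

The grouping and ordering never change between runs, so that part can be trusted without review. Only the wording pass goes through a prompt, which keeps the human validation focused on the one step that can drift.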

10. China continues to accelerate their AI

In 2026, China will accelerate its AI capabilities, creating massive political tension that will prompt Western leaders to remove guardrails. We’re entering a dangerous period where the fear of “losing” to China overrides the fear of AI safety. Research confirms that China is catching up. In 2024, China’s progress on large language models “narrowed the performance gap between China’s models and the U.S. models currently leading the field,” according to a Pentagon report (New Pentagon report on China’s military notes Beijing’s progress on LLMs).

This narrowing gap creates a perverse incentive structure. As P(doom) concerns and the threat of AI-driven catastrophe increase, the geopolitical competition with China paradoxically prompts the U.S. to lower its defenses to maintain velocity. Without this competitor, we might be more inclined to implement strict regulations and safety checks. But with a near-peer rival breathing down our necks, we’re effectively dropping regulation to compete, creating a race to the bottom where speed is prioritized over safety.

Dropping our guardrails on AI might be a strategic miscalculation. Brian Tse notes in China Is Taking AI Safety Seriously. So Must the U.S. that Beijing isn’t necessarily playing by the “move fast and break things” rulebook when it comes to AI safety. Tse says that Chinese leadership views safety not as a hindrance, but as a foundational requirement for sustainable development. China’s top tech official, Ding Xuexiang, said poetically at Davos in January 2025: “If the braking system isn’t under control, you can’t step on the accelerator with confidence.” For Chinese leaders, safety isn’t a constraint; it’s a prerequisite. If the U.S. abandons its braking system in a panic to accelerate, we might be the ones who crash.

How will China’s AI acceleration affect technical writers? I’m not sure, but perhaps we’ll see more AI tools without the guardrails or safety checks. Maybe AI tools will become more dangerous, and the face-to-face confrontation with AI’s real dangers will prompt us to take the halting actions that Eliezer Yudkowsky and Nate Soares, persuaded that advanced AI will lead to human annihilation, advocate for in If Anyone Builds It, Everyone Dies.

As tech writers, we might specialize in writing for catastrophes. I’m personally fascinated by end-of-world dystopian scenarios and cataclysmic events (not sure why). Maybe this is an interest I can build upon?

11. P(doom) talk shifts from AGI subjugation to bad human actors creating WMDs

In 2026, the P(doom) conversation will shift from fears of sentient AI to fears of bad human actors using AI. We won’t see AI becoming sentient or embracing malicious motives, but the conversation about risk could get serious in a different way. I think the high-percentage “sentient AI” P(doom) talk (that is, probability of doom from sentient AI) will fizzle, as people realize that AI isn’t becoming its own conscious, independent actor manipulating its way toward world domination.

This skepticism is shared by experts like philosopher Bernardo Kastrup, who argues that we have no reason to believe silicon computers will ever become conscious. He compares the belief in conscious AI to believing in the “Flying Spaghetti Monster”: it’s logically coherent but lacks any empirical basis (AI won’t be conscious, and here is why).

Kastrup’s critique goes deeper than just mocking the idea; he argues that brains and computers are fundamentally different substrates. Brains don’t just “run software” on “hardware”; they’re a unified physical system where structure and function are inseparable. Believing that a large language model, which is just manipulating symbols, can suddenly “wake up” is, in his view, a category error.

Consequently, the narrative might be shifting. If Kastrup is right, and AI can’t become conscious because consciousness is a physical phenomenon, then the “P(doom)” conversation might shift from sci-fi to national security. Brian Tse argues that the real threat might not be a superintelligent AI subjugating humanity, but rather bad actors using AI to engineer biological or nuclear catastrophes (China Is Taking AI Safety Seriously. So Must the U.S.).

Tse says that “President Trump’s AI Action Plan warns that AI may ‘pose novel national security risks in the near future,’ specifically in cybersecurity and in chemical, biological, radiological, and nuclear (CBRN) domains.” He points out that China is also prioritizing safety standards to address “loss of control risks.” In short, as time progresses and we don’t see autonomous, conscious/sentient AI develop, we’ll worry less about computers enslaving us, and more about AI allowing humans to exert outsized influence on weapons of mass destruction or other global catastrophes.

12. Defense becomes AI heavy

In 2026, defense will become heavily AI-dependent. I don’t have any predictions about what happens on the world scene militarily, only that the military will continue to become more AI-dependent. Does the Russia-Ukraine war drag on? Does the US invade Venezuela? Does China take over Taiwan? Does a nuclear submarine start WWIII? I don’t know. I’m almost finished with Annie Jacobsen’s Nuclear War: A Scenario, and have been watching Fallout online. It seems like the number of potentially world-ending scenarios is stacking up.

AI’s democratization of power is especially dangerous in cybersecurity. A report from Help Net Security, Five identity-driven shifts reshaping enterprise security in 2026, notes that AI has lowered the barrier to entry for high-impact attacks. Smaller nations and proxy groups no longer need elite armies; they can use AI to do the following:

- Weaponize stolen credentials at scale

- Generate synthetic personas and deepfakes for influence operations

- Automate reconnaissance and vulnerability exploitation

- Target critical infrastructure remotely using AI-assisted tactics

It’s not just adversaries who are gearing up; the US military is rapidly integrating AI into its own infrastructure. Recognizing that AI has tremendous applications for military decision-making and operations, the Pentagon launched a new platform called GenAI.mil to deliver commercial AI options directly to its workforce. They’re even partnering with Elon Musk’s xAI to integrate the Grok chatbot, enabling personnel to securely handle “Controlled Unclassified Information (CUI) in daily workflows” and gain “real‑time global insights from the X platform” (Jon Harper, New Pentagon report on China’s military notes Beijing’s progress on LLMs). This shift underscores how AI is becoming essential infrastructure for the armed forces, not just a weapon to be feared.

For tech comm, this likely means more job opportunities in the defense sector, but also a greater need for clearance and specialized knowledge.

Conclusion

In sum, in 2026, the AI hype will likely level off, but the virtuous cycle of better tools leading to better products will become undeniable. Hiring will remain flat as companies seek specialized AI talent over generalists, and tech will saturate every corner of the economy. The economy won’t crash, and AI-powered docs and tools like NotebookLM will transform how we consume information. OpenAI may slip further behind Google and Anthropic in the enterprise, while tech writers pivot to becoming automation engineers.

Internationally, China will continue its AI acceleration, the P(doom) conversation will shift toward human-driven WMD risks, and defense will become heavily AI-dependent. It’s going to be a year of settling in, specializing, and perhaps finally figuring out how to make all these agents actually work for us. I’m excited and optimistic about 2026. Surprises will surely come, and we’re living in such uncertain times that anything seems possible.

Related posts

See also this predictions post from Fabrizio Ferri-Benedetti on Passo.uno: My day as an augmented technical writer in 2030

Works cited

Clarivate. Clarivate Report: 67% of Libraries Are Adopting AI. TechIntelPro, 31 Oct. 2025.

Delinea. Five identity-driven shifts reshaping enterprise security in 2026. Help Net Security, 24 Dec. 2025.

Digital Progress and Trends Report 2025. World Bank, 2025.

Harper, Jon. New Pentagon report on China’s military notes Beijing’s progress on LLMs. DefenseScoop, 26 Dec. 2025.

Index.dev. Developer Productivity Statistics with AI Tools 2025. 24 Nov. 2025.

Kastrup, Bernardo. AI won’t be conscious, and here is why. 2023.

McKinsey Global Institute. AI: Work partnerships between people, agents, and robots. 25 Nov. 2025.

PwC. AI Jobs Barometer. 2025.

Sheppard, Cameron. Renton Police Department will get more AI technology. Renton Reporter, 12 Dec. 2025.

Times of India. How 2025 is the year Google made up for its ‘2024 AI mistake’. 30 Dec. 2025.

Trautman, Erik. The Virtuous Cycle of AI Products. 12 June 2018.

Tse, Brian. China Is Taking AI Safety Seriously. So Must the U.S. TIME, 13 Aug. 2025.

Vanguard. Vanguard Economic and Market Outlook 2026. Dec. 2025.

Wingårdh, Vanessa. AI Was Supposed to Cure Cancer - We Got This Instead. YouTube, 12 Oct. 2025.

Wolfenstein, Konrad. AI Market Share - ChatGPT’s Dominance Is Crumbling. Xpert.Digital, 28 Dec. 2025.

About Tom Johnson

I'm an API technical writer based in the Seattle area. On this blog, I write about topics related to technical writing and communication — such as software documentation, API documentation, AI, information architecture, content strategy, writing processes, plain language, tech comm careers, and more. Check out my API documentation course if you're looking for more info about documenting APIs. Or see my posts on AI and AI course section for more on the latest in AI and tech comm.

Note that the opinions I express on my blog are my own points of view, not those of my employer.